Adding Cortana Voice Commands to a UWP App

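This article covers the use cases for Cortana's voice commands and how to connect a UWP app to them, so that users can launch the app directly through Cortana and use some of its lightweight features. Bing Dictionary (必应词典) serves as the running example: we walk through how its UWP version integrates with Cortana's voice features.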

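Cortana is arguably one of the big selling points of Windows 10, and it has drawn more praise than its neighbors Google Now and Siri. Alongside the "return" of the classic Start menu and the Universal Windows Platform (UWP) app model, Cortana is something marketers can hardly go three sentences without mentioning. Cortana can in fact integrate with third-party apps, and Microsoft appears to be quietly turning it into a platform, so Cortana as an entry point has naturally become a battleground every developer has to fight for.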

Use Cases

At present, the main interface Cortana exposes to third-party apps is Voice Command Definitions (VCD).

Let's look at what VCD can actually do. Broadly speaking, it supports two scenarios:

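The first uses the third-party app's own data: after the user speaks or types a command, Cortana displays data supplied by the app inside Cortana's own UI, completing a lightweight task such as showing a short piece of text or information.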

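The second passes information from the user's spoken or typed command to the app as launch parameters, so the app opens directly on the relevant page and the navigation path gets shorter. For example, if you tell Cortana "Find nearby grilled-fish restaurants in Dianping" (大众点评), Cortana opens Dianping and jumps straight to nearby restaurants that serve grilled fish. Cortana saves the user the steps of opening the app, opening the search page, and searching for grilled-fish restaurants nearby.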

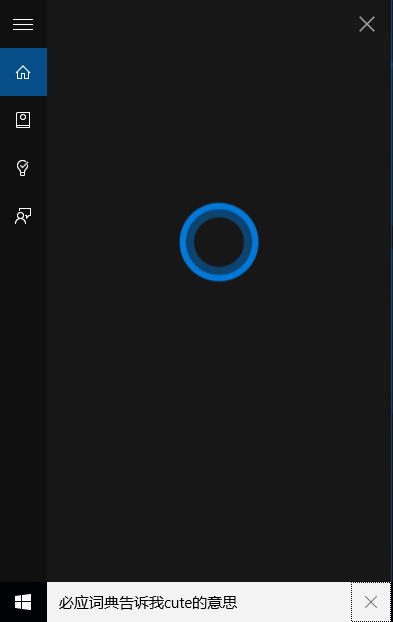

The new version of Bing Dictionary mainly uses the first scenario. Here is what the whole experience looks like:

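The user says to Cortana: "Bing Dictionary, tell me what 'cute' means" (必应词典,告诉我cute是什么意思?). Cortana recognizes the command, asks Bing Dictionary for the meaning of "cute", and displays the result.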

Implementation

There are two key parts to making this work. The first is defining the grammar.

A Cortana VCD grammar file is an XML file. Start with the official documentation and the official sample code:

https://msdn.microsoft.com/en-us/library/windows/apps/dn706593.aspx

https://github.com/Microsoft/Windows-universal-samples/tree/master/Samples/CortanaVoiceCommand

Here I will focus on Bing Dictionary's VCD implementation. This is its VCD grammar file:

```xml
<?xml version="1.0" encoding="utf-8" ?>
<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.2">
  <CommandSet xml:lang="zh-hans-cn" Name="DictCommandSet_zh-cn">
    <AppName> 必应词典 </AppName>
    <Example> 翻译一下 friend </Example>

    <Command Name="searchWord">
      <Example> 翻译一下 friend </Example>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}[告诉我]{query}的意思 </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}[告诉我]{query}[是]什么意思 </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}{query}[用][英语][英文]怎么说 </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}[英语][英文]{query}怎么说 </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}{query}用[汉语][中文]怎么说 </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}什么是{query}</ListenFor>
      <Feedback>正在查询{query}的释义...</Feedback>
      <VoiceCommandService Target="DictVoiceCommandService"/>
    </Command>

    <Command Name="translate">
      <Example> 翻译一下 friend</Example>
      <ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}翻译[一下][单词]{query}</ListenFor>
      <Feedback>正在翻译{query}...</Feedback>
      <VoiceCommandService Target="DictVoiceCommandService"/>
    </Command>

    <PhraseTopic Label="query" Scenario="Search">
      <Subject> Words </Subject>
    </PhraseTopic>
  </CommandSet>
</VoiceCommands>
```

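VCD grammars are language-specific: each Cortana language gets its own CommandSet. For Chinese, the language is zh-CN or zh-hans-CN. Every CommandSet requires an AppName. In principle the AppName can be customized and need not match the app's name exactly. Our app's full name, for instance, is "必应词典Win10版" (Bing Dictionary Win10 Edition); having to say "Bing Dictionary Win10 Edition, tell me what 'cute' means" would probably drive users up the wall. Still, choose the name with some care: first, it should be easy for users to say; second, a name that is too common may be confused with other apps, or may interfere with some of Cortana's own features, which risks users uninstalling the app.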
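Because command sets are keyed by language, app code that later needs to find its installed command set (for example through VoiceCommandDefinitionManager.InstalledCommandDefinitions, a dictionary keyed by the CommandSet Name) has to map a language tag to the right name. The following is a minimal sketch of such a lookup, not Bing Dictionary's code: CommandSetNames and ForLanguage are hypothetical names, and the table assumes only the single Chinese command set shown above. It compares tags case-insensitively, because BCP 47 language tags are case-insensitive and the file writes zh-hans-cn while other APIs may report zh-Hans-CN or zh-CN.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: maps a language tag to the Name of the matching
// CommandSet in the VCD file. Only the Chinese set from the article is listed.
public static class CommandSetNames
{
    // BCP 47 tags are case-insensitive, so "zh-hans-cn" and "zh-Hans-CN" must match.
    private static readonly Dictionary<string, string> ByLanguageTag =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            // The article says Chinese may appear as either zh-CN or zh-hans-CN.
            ["zh-CN"] = "DictCommandSet_zh-cn",
            ["zh-Hans-CN"] = "DictCommandSet_zh-cn",
        };

    // Returns the CommandSet name for the tag, or null when no set covers it.
    public static string ForLanguage(string languageTag) =>
        languageTag != null && ByLanguageTag.TryGetValue(languageTag, out var name)
            ? name
            : null;
}
```

A caller could pass the result to InstalledCommandDefinitions.TryGetValue before, say, updating a phrase list; returning null for an unsupported language lets it skip that step cleanly instead of throwing.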

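In a ListenFor element, [] marks optional words and {} marks special tokens (a PhraseList or PhraseTopic label such as {query}, or the built-in {builtin:AppName}). A ListenFor cannot consist entirely of optional words; otherwise, like a bare . or * in a regular expression, it would match anything and could not be matched meaningfully. {builtin:AppName} marks where the app name appears; the name can come at the start of the phrase or elsewhere in it.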

For example: `<ListenFor RequireAppName="ExplicitlySpecified"> {builtin:AppName}{query}[用][英语][英文]怎么说 </ListenFor>`

With this rule, "必应词典xxx怎么说", "必应词典xxx用英语怎么说", "必应词典xxx用英文怎么说", "必应词典xxx英语怎么说" and so on are all recognized (roughly, "Bing Dictionary, how do you say xxx [in English]").
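To make the bracket semantics concrete, here is a small model that enumerates the phrases a single ListenFor rule accepts. It is an illustration under stated assumptions, not how Cortana's recognizer works internally: it assumes each [...] is independently optional, that each {...} is replaced by one sample value supplied by the caller (the hypothetical slots table), and it enforces the rule above that a ListenFor may not consist only of optional words. ListenForExpander and Expand are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Illustrative model only: enumerates the phrases one ListenFor rule accepts,
// assuming every [..] is independently optional and every {..} is filled from
// a caller-supplied table. Cortana's real matcher is a speech grammar, not this.
public static class ListenForExpander
{
    // Tokens: [optional word] | {placeholder} | run of literal text.
    private static readonly Regex Token =
        new Regex(@"\[([^\]]*)\]|\{([^}]*)\}|[^\[\{]+");

    public static List<string> Expand(string rule, IReadOnlyDictionary<string, string> slots)
    {
        var results = new List<string> { "" };
        bool hasRequiredPart = false;

        foreach (Match m in Token.Matches(rule.Trim()))
        {
            var next = new List<string>();
            if (m.Groups[1].Success)
            {
                // Optional word: each partial phrase branches into "without" and "with".
                string word = m.Groups[1].Value;
                foreach (var prefix in results)
                {
                    next.Add(prefix);
                    next.Add(prefix + word);
                }
            }
            else
            {
                // Placeholder (looked up in slots; throws if missing) or literal text.
                string text = m.Groups[2].Success ? slots[m.Groups[2].Value] : m.Value;
                if (m.Groups[2].Success || m.Value.Trim().Length > 0)
                    hasRequiredPart = true;
                foreach (var prefix in results)
                    next.Add(prefix + text);
            }
            results = next;
        }

        // Mirrors the article's rule: a ListenFor made only of optional words is invalid.
        if (!hasRequiredPart)
            throw new ArgumentException("A ListenFor rule needs at least one non-optional part.");
        return results;
    }
}
```

Run on the example rule with 必应词典 for {builtin:AppName} and xxx for {query}, it yields 2 × 2 × 2 = 8 phrases, including the four listed above. Under this model the remaining four include unnatural ones such as 必应词典xxx用英语英文怎么说, a side effect of writing alternatives like [英语][英文] as independent optional words.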

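As MSDN describes, a PhraseTopic can stand for arbitrary words; its Scenario attribute and Subject elements help the speech recognition model recognize the spoken input more accurately. The allowed values are listed on MSDN.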

In the app's App.xaml.cs, load the finished VCD file into Cortana when the app launches:

```csharp
// Namespaces used below (App.xaml.cs)
using System;
using System.Threading.Tasks;
using Windows.ApplicationModel;
using Windows.ApplicationModel.Activation;
using Windows.Storage;

protected async override void OnLaunched(LaunchActivatedEventArgs e)
{
    …

    // Await the returned Task instead of dropping it; InstallVoiceCommand
    // catches and logs its own exceptions.
    await InstallVoiceCommand();

    …
}

private async Task InstallVoiceCommand()
{
    try
    {
        // The user can turn off Cortana voice commands in the app's settings.
        if (AppSettings.GetInstance().CortanaVCDEnableStatus == false)
            return;

        // Install the main VCD. Since there's no simple way to test that the VCD has been imported, or that it's your most recent
        // version, it's not unreasonable to do this upon app load.
        StorageFile vcdStorageFile = await Package.Current.InstalledLocation.GetFileAsync(@"DictVoiceCommands.xml");

        await Windows.ApplicationModel.VoiceCommands.VoiceCommandDefinitionManager.InstallCommandDefinitionsFromStorageFileAsync(vcdStorageFile);
    }
    catch (Exception ex)
    {
        System.Diagnostics.Debug.WriteLine("Installing Voice Commands Failed: " + ex.ToString());
    }
}
```

The second key part is the voice command app service.

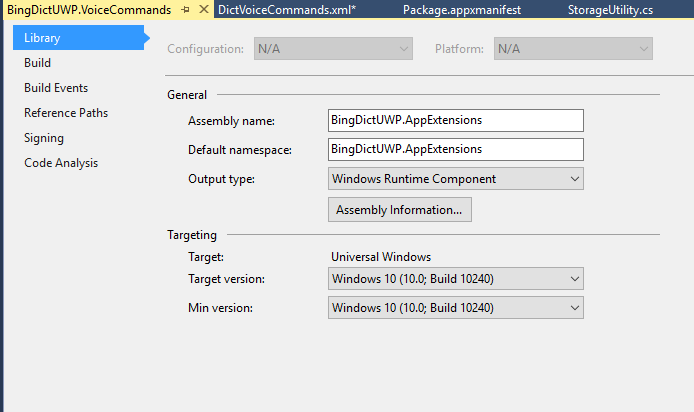

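Following the MSDN sample, Bing Dictionary adds a BingDictUWP.VoiceCommands project to the solution. Note that this project's output type must be Windows Runtime Component; otherwise the background task will not work, as shown in the figure.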

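For the background task project, you can again start from the GitHub sample linked above. Its overall skeleton can be reused as-is; just make your own changes in the relevant places.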

Below is the code Bing Dictionary uses to handle the voice commands Cortana passes to it:

```csharp
using System;
using System.Globalization;
using Windows.ApplicationModel.AppService;
using Windows.ApplicationModel.Background;
using Windows.ApplicationModel.Resources.Core;
using Windows.ApplicationModel.VoiceCommands;

namespace BingDictUWP.AppExtensions
{
    /// <summary>
    /// The VoiceCommandService implements the entrypoint for all headless voice commands
    /// invoked via Cortana. The individual commands supported are described in the
    /// DictVoiceCommands.xml VCD file in the app project. The service
    /// entrypoint is defined in the Package Manifest (see the uap:Extension section in
    /// the app's Package.appxmanifest).
    /// </summary>
    public sealed class DictVoiceCommandService : IBackgroundTask
    {
        ...

        /// <summary>
        /// Background task entrypoint. Voice Commands using the <VoiceCommandService Target="...">
        /// tag will invoke this when they are recognized by Cortana, passing along details of the
        /// invocation.
        ///
        /// Background tasks must respond to activation by Cortana within 0.5 seconds, and must
        /// report progress to Cortana every 5 seconds (unless Cortana is waiting for user
        /// input). There is no execution time limit on the background task managed by Cortana,
        /// but developers should use plmdebug (https://msdn.microsoft.com/en-us/library/windows/hardware/jj680085%28v=vs.85%29.aspx)
        /// on the Cortana app package in order to prevent Cortana timing out the task during
        /// debugging.
        ///
        /// Cortana dismisses its UI if it loses focus. This will cause it to terminate the background
        /// task, even if the background task is being debugged. Use of Remote Debugging is recommended
        /// in order to debug background task behaviors. In order to debug background tasks, open the
        /// project properties for the app package (not the background task project), and enable
        /// Debug -> "Do not launch, but debug my code when it starts". Alternatively, add a long
        /// initial progress screen, and attach to the background task process while it executes.
        /// </summary>
        /// <param name="taskInstance">Connection to the hosting background service process.</param>
        public async void Run(IBackgroundTaskInstance taskInstance)
        {
            mServiceDeferral = taskInstance.GetDeferral();

            // Register to receive an event if Cortana dismisses the background task. This will
            // occur if the task takes too long to respond, or if Cortana's UI is dismissed.
            // Any pending operations should be cancelled or waited on to clean up where possible.
            taskInstance.Canceled += OnTaskCanceled;

            var triggerDetails = taskInstance.TriggerDetails as AppServiceTriggerDetails;

            // Load localized resources for strings sent to Cortana to be displayed to the user.
            mCortanaResourceMap = ResourceManager.Current.MainResourceMap.GetSubtree("Resources");

            // Select the system language, which is what Cortana should be running as.
            mCortanaContext = ResourceContext.GetForViewIndependentUse();
            var lang = Windows.Media.SpeechRecognition.SpeechRecognizer.SystemSpeechLanguage.LanguageTag;
            mCortanaContext.Languages = new string[] { lang };

            // Get the currently used system date format
            mDateFormatInfo = CultureInfo.CurrentCulture.DateTimeFormat;

            // This should match the uap:AppService and VoiceCommandService references from the
            // package manifest and VCD files, respectively. Make sure we've been launched by
            // a Cortana Voice Command.
            if ((triggerDetails != null) && (triggerDetails.Name == "DictVoiceCommandService"))
            {
                try
                {
                    mVoiceServiceConnection = VoiceCommandServiceConnection.FromAppServiceTriggerDetails(triggerDetails);
                    mVoiceServiceConnection.VoiceCommandCompleted += OnVoiceCommandCompleted;

                    VoiceCommand voiceCommand = await mVoiceServiceConnection.GetVoiceCommandAsync();

                    // Depending on the operation (defined in DictVoiceCommands.xml),
                    // perform the appropriate command. Both commands carry the word in {query}.
                    switch (voiceCommand.CommandName)
                    {
                        case "searchWord":
                        case "translate":
                            var keyword = voiceCommand.Properties["query"][0];
                            await SendCompletionMessageForKeyword(keyword);
                            break;
                    }
                }
                catch (Exception ex)
                {
                    System.Diagnostics.Debug.WriteLine("Handling Voice Command failed " + ex.ToString());
                }
            }
        }

        …
    }
}
```
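In Run(), voiceCommand.Properties["query"][0] throws if the {query} slot is missing or empty; the exception is merely logged and nothing is sent back to Cortana. A more defensive extraction might look like the sketch below. It is not Bing Dictionary's code: VoiceCommandDispatch and TryGetKeyword are hypothetical names, and the properties parameter models VoiceCommand.Properties as IReadOnlyDictionary<string, IReadOnlyList<string>>, which, as far as I know, is how that WinRT map from label to recognized values surfaces in C#. Keeping the helper free of UWP types lets it be tested outside Cortana.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper mirroring the switch in Run(): both commands in the VCD
// carry the recognized word in the "query" PhraseTopic.
public static class VoiceCommandDispatch
{
    // Returns the trimmed keyword, or null for unknown commands or a missing/empty slot.
    public static string TryGetKeyword(
        string commandName,
        IReadOnlyDictionary<string, IReadOnlyList<string>> properties)
    {
        switch (commandName)
        {
            case "searchWord":
            case "translate":
                // Unlike properties["query"][0], this does not throw when the slot is absent.
                if (properties.TryGetValue("query", out var values)
                    && values.Count > 0
                    && !string.IsNullOrWhiteSpace(values[0]))
                    return values[0].Trim();
                return null;
            default:
                // Command names not handled here (e.g. from a stale VCD) are ignored.
                return null;
        }
    }
}
```

Inside Run(), the switch could then become a call to TryGetKeyword(voiceCommand.CommandName, voiceCommand.Properties), calling SendCompletionMessageForKeyword only when the result is not null; what to report to the user otherwise is left open here.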

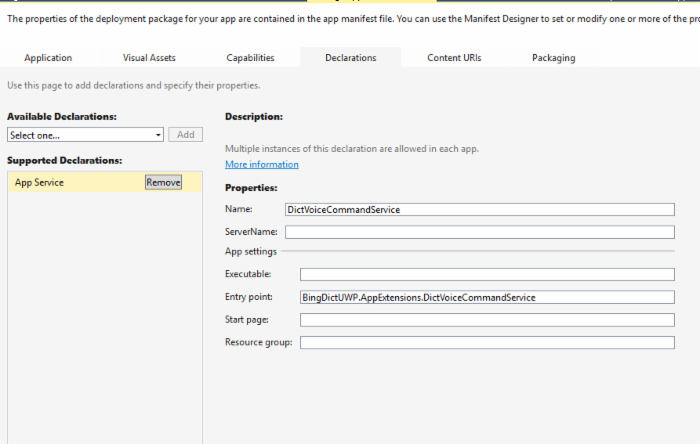

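You also need to declare the App Service in Package.appxmanifest and fill in its entry point correctly, as shown in the figure. The entry point is the background task's fully qualified class name (here BingDictUWP.AppExtensions.DictVoiceCommandService), and the App Service name must match the VoiceCommandService Target in the VCD file (DictVoiceCommandService), which is also the name the code above checks.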

Closing Thoughts

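Cortana voice commands still have room for improvement; for example, VCD grammars must be written entirely by hand, with no natural language understanding. If you run into other pain points, feel free to leave us a comment so we can discuss them. Perhaps they will be fixed in the next version :)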
