Oracle Dynamic Sampling Analysis

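Dynamic Sampling Concepts

Dynamic sampling was introduced in Oracle 9i Release 2. Its purpose is to let the optimizer produce a better execution plan when database objects have not been analyzed, that is, when their statistics are missing. Put simply, when a segment (table, index, or partition) has no statistics, dynamic sampling reads a limited number of the segment's data blocks to obtain the statistics the CBO needs to choose a sound plan. Dynamic sampling only supplements regular statistics; it cannot fully replace statistics gathering.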

Dynamic sampling first became available in Oracle9i Database Release 2. It is the ability of the cost-based optimizer (CBO) to sample the tables a query references during a hard parse, to determine better default statistics for unanalyzed segments, and to verify its “guesses.” This sampling takes place only at hard parse time and is used to dynamically generate better statistics for the optimizer to use, hence the name dynamic sampling.

The purpose of dynamic sampling is to improve server performance by determining more accurate estimates for predicate selectivity and statistics for tables and indexes. The statistics for tables and indexes include table block counts, applicable index block counts, table cardinalities, and relevant join column statistics. These more accurate estimates allow the optimizer to produce better performing plans.

In Oracle 11g and earlier this feature is called dynamic sampling; from Oracle 12c onward it is called dynamic statistics.

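Introduction to Dynamic Sampling

Dynamic sampling is easiest to understand through a concrete example, since theory alone tends to be dry. Create a table named test that contains 50319 rows, as shown below.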

SQL> create table test

2 as

3 select owner, object_type

4 from dba_objects;

Table created.

SQL> select count(1) from test;

COUNT(1)

----------

50319

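We use the dynamic_sampling(test 0) hint to disable dynamic sampling (the levels are covered later). As the plan below shows, when the table has not been analyzed and dynamic sampling is disabled, the only information the CBO can use is what the data dictionary stores about the table, such as the number of extents and blocks, and that is often not enough. Here the optimizer estimates 11027 rows for table test, far from the actual 50319. In a complex environment such a misestimate can easily lead the CBO to a bad execution plan.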

SQL> set autotrace traceonly explain;

SQL> select /*+ dynamic_sampling(test 0) */ * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 11027 | 301K| 31 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 11027 | 301K| 31 (0)| 00:00:01 |

--------------------------------------------------------------------------

SQL> set autotrace off;

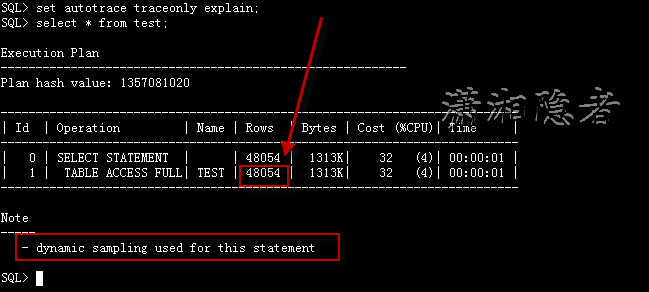

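With dynamic sampling enabled (the default level is 2), the optimizer uses the sampled data to estimate 48054 rows for table TEST, which is already close to the actual 50319 and much better than the estimate without sampling. Of course, dynamic sampling cannot be expected to return exact figures, because it reads only some of the data blocks.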

SQL> set autotrace traceonly explain;

SQL> select * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 48054 | 1313K| 32 (4)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 48054 | 1313K| 32 (4)| 00:00:01 |

--------------------------------------------------------------------------

Note

-----

- dynamic sampling used for this statement

SQL> set autotrace off;

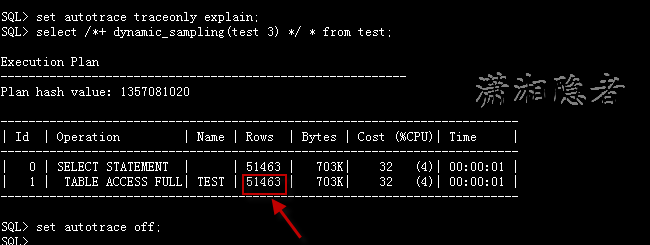

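If we raise the dynamic sampling level to 3, as shown below, the optimizer obtains more accurate information than at the default level 2. It now estimates 51463 rows for table TEST, one step closer to the actual count than 48054.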

SQL> set autotrace traceonly explain;

SQL> select /*+ dynamic_sampling(test 3) */ * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 51463 | 703K| 32 (4)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 51463 | 703K| 32 (4)| 00:00:01 |

--------------------------------------------------------------------------

SQL> set autotrace off;

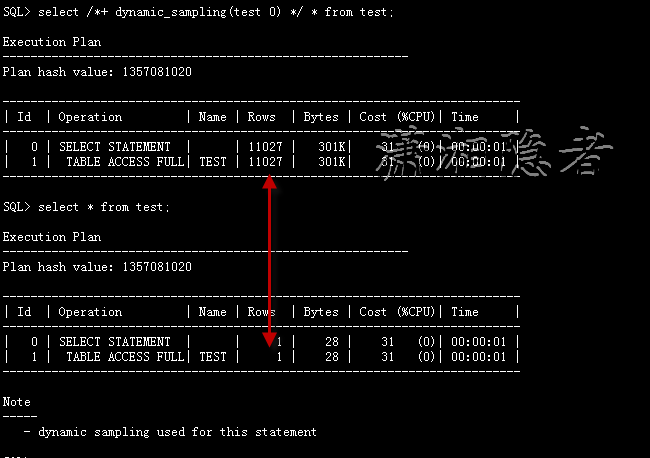

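Tom's article (the Ask Tom column listed under References) points out that without dynamic sampling, the CBO estimates the same result set size after all rows of the table have been deleted as it did before. The reason is that deleting rows does not release the extents and blocks allocated to the table (the high-water mark does not move). If the CBO does not sample data blocks and relies only on the extent and block information in the data dictionary, it wrongly assumes that the table still holds as much data as before.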

SQL> delete from test;

50319 rows deleted.

SQL> commit;

Commit complete.

SQL> set autotrace traceonly explain;

SQL> select /*+ dynamic_sampling(test 0) */ * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 11027 | 301K| 31 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 11027 | 301K| 31 (0)| 00:00:01 |

--------------------------------------------------------------------------

SQL> select * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 28 | 31 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 1 | 28 | 31 (0)| 00:00:01 |

--------------------------------------------------------------------------

Note

-----

- dynamic sampling used for this statement

SQL>
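The high-water-mark effect described above can be checked directly. The following is a minimal sketch, assuming the test table from the example still exists in the current schema. It looks at the space allocated to the segment after the delete, and then after a truncate, which (unlike delete) resets the high-water mark.

```sql
-- Space still allocated to TEST after "delete from test; commit;".
-- The block and extent counts are not reduced by the delete.
select segment_name, blocks, extents
  from user_segments
 where segment_name = 'TEST';

-- TRUNCATE resets the high-water mark and, by default, releases the
-- extents beyond the initial allocation.
truncate table test;

select segment_name, blocks, extents
  from user_segments
 where segment_name = 'TEST';
```

Comparing the two result sets shows why an optimizer that only looks at allocated blocks keeps overestimating an emptied table until the space is actually released or the blocks are sampled.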

When Is Dynamic Sampling Used?

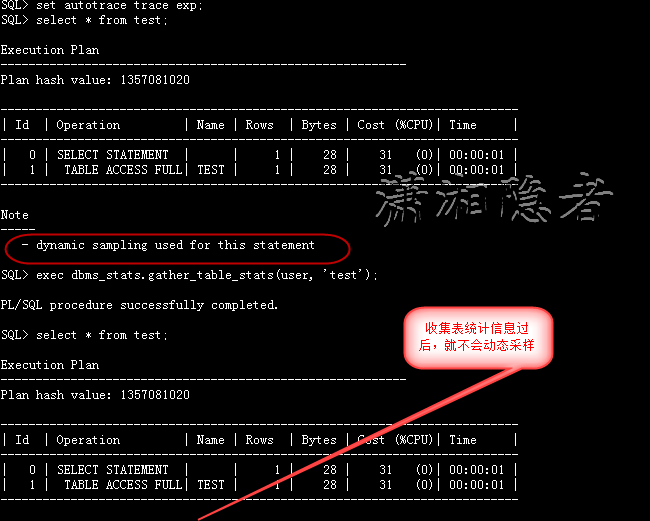

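The first case is a table without statistics. As shown below, after we gather statistics on table TEST with dbms_stats.gather_table_stats, the note "dynamic sampling used for this statement" disappears. In other words, once the optimizer finds that TEST has been analyzed, it no longer uses dynamic sampling at the default level. This matches what was said at the beginning: dynamic sampling is meant for objects that have not been analyzed, that is, whose statistics are missing.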

SQL> set autotrace trace exp;

SQL> select * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 28 | 31 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 1 | 28 | 31 (0)| 00:00:01 |

--------------------------------------------------------------------------

Note

-----

- dynamic sampling used for this statement

SQL> exec dbms_stats.gather_table_stats(user, 'test');

PL/SQL procedure successfully completed.

SQL> select * from test;

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 28 | 31 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| TEST | 1 | 28 | 31 (0)| 00:00:01 |

--------------------------------------------------------------------------
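Whether a table counts as analyzed can be read from the data dictionary. The sketch below assumes the TEST table from the example; it shows the statistics now recorded for the table and then removes them again with dbms_stats.delete_table_stats, after which a query on TEST would be expected to fall back to dynamic sampling at the default level 2.

```sql
-- LAST_ANALYZED is NULL for a table that has never been analyzed
-- and holds a timestamp once statistics have been gathered.
select table_name, num_rows, blocks, last_analyzed
  from user_tables
 where table_name = 'TEST';

-- Remove the statistics again to return the table to the unanalyzed state.
exec dbms_stats.delete_table_stats(user, 'TEST');
```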

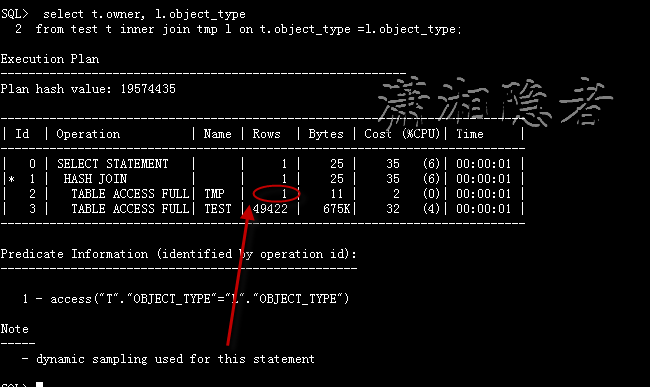

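The second case: even when table TEST has been analyzed, dynamic sampling is still used if the query also references a temporary table, because temporary tables are normally not analyzed and therefore have no statistics. This is shown below.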

SQL> drop table test;

SQL> create table test

2 as

3 select owner, object_type

4 from dba_objects;

Table created.

SQL> exec dbms_stats.gather_table_stats(user, 'test');

PL/SQL procedure successfully completed.

SQL> create global temporary table tmp

2 (object_type varchar2(19));

Table created.

SQL> insert into tmp

2 select distinct object_type from dba_objects;

41 rows created.

SQL> commit;

Commit complete.

SQL> set autotrace traceonly explain;

SQL> select t.owner, l.object_type

2 from test t inner join tmp l on t.object_type =l.object_type;

Execution Plan

----------------------------------------------------------

Plan hash value: 19574435

---------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 25 | 35 (6)| 00:00:01 |

|* 1 | HASH JOIN | | 1 | 25 | 35 (6)| 00:00:01 |

| 2 | TABLE ACCESS FULL| TMP | 1 | 11 | 2 (0)| 00:00:01 |

| 3 | TABLE ACCESS FULL| TEST | 49422 | 675K| 32 (4)| 00:00:01 |

---------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - access("T"."OBJECT_TYPE"="L"."OBJECT_TYPE")

Note

-----

- dynamic sampling used for this statement

SQL>

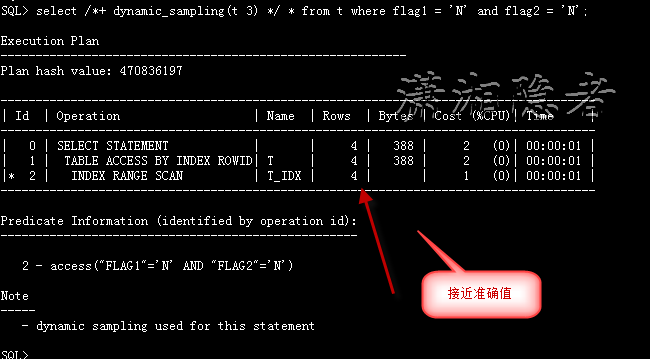

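Dynamic sampling has another distinctive ability: it can account for correlation between columns. Ordinary column statistics are gathered independently for each column, so they fall short when the predicates of a query involve correlated columns. Consider Tom's example.

Create a specially constructed table t, create an index t_idx on columns flag1 and flag2, and then gather statistics.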

SQL> create table t

2 as select decode(mod(rownum,2),0,'N', 'Y') flag1,

3 decode(mod(rownum,2),0,'Y', 'N') flag2, a.*

4 from all_objects a;

Table created.

SQL> create index t_idx on t(flag1, flag2);

Index created.

SQL> begin

2 dbms_stats.gather_table_stats(user, 'T',

3 method_opt =>'for all indexed columns size 254');

4 end;

5 /

PL/SQL procedure successfully completed.

The row counts for table t are shown below. For now, do not worry about why the query retrieves NUM_ROWS and its fractions.

SQL> select num_rows, num_rows/2, num_rows/2/2

2 from user_tables

3 where table_name='T';

NUM_ROWS NUM_ROWS/2 NUM_ROWS/2/2

---------- ---------- ------------

49875 24937.5 12468.75

First, look at a statement that filters on flag1. The CBO estimates 24757 rows, which is very close to 24937.5.

SQL> set autotrace traceonly explain;

SQL> select * from t where flag1='N';

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 24757 | 2345K| 161 (2)| 00:00:02 |

|* 1 | TABLE ACCESS FULL| T | 24757 | 2345K| 161 (2)| 00:00:02 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("FLAG1"='N')

Next, look at a statement that filters on flag2. The CBO estimates 25118 rows, also very close to 24937.5.

SQL> select * from t where flag2='N';

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 25118 | 2379K| 161 (2)| 00:00:02 |

|* 1 | TABLE ACCESS FULL| T | 25118 | 2379K| 161 (2)| 00:00:02 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("FLAG2"='N')

With the condition flag1 = 'N' and flag2 = 'N', simple reasoning tells us that no such row can exist, which is exactly why this special case was constructed. Now see what the CBO estimates.

SQL> select * from t where flag1 = 'N' and flag2 = 'N';

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 12468 | 1181K| 160 (2)| 00:00:02 |

|* 1 | TABLE ACCESS FULL| T | 12468 | 1181K| 160 (2)| 00:00:02 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("FLAG1"='N' AND "FLAG2"='N')

The CBO estimates 12468 rows, which is very far from reality. It arrives at this figure as follows:

Rows with flag1 = 'N' make up 1/2 of the total.

Rows with flag2 = 'N' make up 1/2 of the total.

Treating the two predicates as independent, the CBO computes NUM_ROWS/2/2 = 12468, which is clearly unreasonable. Now raise the dynamic sampling level and see whether dynamic sampling avoids this error.

SQL> select /*+ dynamic_sampling(t 3) */ * from t where flag1 = 'N' and flag2 = 'N';

Execution Plan

----------------------------------------------------------

Plan hash value: 470836197

-------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

-------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 388 | 2 (0)| 00:00:01 |

| 1 | TABLE ACCESS BY INDEX ROWID| T | 4 | 388 | 2 (0)| 00:00:01 |

|* 2 | INDEX RANGE SCAN | T_IDX | 4 | | 1 (0)| 00:00:01 |

-------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("FLAG1"='N' AND "FLAG2"='N')

Note

-----

- dynamic sampling used for this statement

SQL>
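The gap between the estimate and reality can be confirmed by hand. The sketch below assumes table t from the example above; the column alias is made up for illustration. The first query counts the rows that really satisfy both predicates, and the second reproduces the estimate obtained by treating the two predicates as independent.

```sql
-- Actual number of rows satisfying both predicates. By construction
-- flag1 and flag2 always hold opposite values, so this returns 0.
select count(*) from t where flag1 = 'N' and flag2 = 'N';

-- The independence-based estimate, reproduced by hand:
-- NUM_ROWS * selectivity(flag1 = 'N') * selectivity(flag2 = 'N')
select num_rows,
       trunc(num_rows * (1/2) * (1/2)) as estimate_assuming_independence
  from user_tables
 where table_name = 'T';
```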

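Dynamic Sampling Levels

Oracle 11g defines 11 dynamic sampling levels (0 through 10); for details see the official Oracle 11g documentation at http://download.oracle.com/docs/cd/E11882_01/server.112/e10821/stats.htm#PFGRF94760. The table below also lists level 11, at which the optimizer decides automatically when to sample.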

Table 13-10 Dynamic Statistics Levels

| Level | When the Optimizer Uses Dynamic Statistics | Notes | Sample Size (Blocks) |

| 0 | Do not use dynamic statistics | No dynamic sampling is performed. | n/a |

| 1 | Use dynamic statistics for all tables that do not have statistics, but only if the following criteria are met: · There is at least 1 nonpartitioned table in the query that does not have statistics. · This table has no indexes. · This table has more blocks than the number of blocks that would be used for dynamic statistics of this table. | Oracle samples unanalyzed tables only when all three conditions hold: (1) the SQL references at least one unanalyzed, nonpartitioned table; (2) the unanalyzed table has no indexes; (3) the unanalyzed table occupies more blocks than the dynamic sampling size (32 blocks). | 32 |

| 2 | Use dynamic statistics if at least one table in the statement has no statistics. This is the default setting. | All unanalyzed tables are sampled; the default number of sampled blocks is 64. | 64 |

| 3 | Use dynamic statistics if any of the following conditions is true: · The statement meets level 2 criteria. · The statement has one or more expressions used in the WHERE clause predicates, for example, WHERE SUBSTR(cust_last_name,1,3). | Samples every table that meets the level 2 criteria, plus tables whose predicates could benefit from dynamic sampling. The default number of blocks is sampled, for unanalyzed tables as well. | 64 |

| 4 | Use dynamic statistics if any of the following conditions is true: · The statement meets level 3 criteria. · The statement uses complex predicates (an OR or AND operator between multiple predicates on the same table). | Samples every table that meets the level 3 criteria, plus tables with single-table predicates that reference two or more columns. The default number of blocks is sampled; for unanalyzed tables the sample is 1 times the default number of blocks. | 64 |

| 5 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, using 2 times the default number of dynamic sampling blocks. | 128 |

| 6 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, using 4 times the default number of dynamic sampling blocks. | 256 |

| 7 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, using 8 times the default number of dynamic sampling blocks. | 512 |

| 8 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, using 32 times the default number of dynamic sampling blocks. | 1024 |

| 9 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, using 128 times the default number of dynamic sampling blocks. | 4086 |

| 10 | Use dynamic statistics if the statement meets level 4 criteria. | Samples the tables that meet the level 4 criteria, reading all of their blocks. | All blocks |

| 11 | Use dynamic statistics automatically whenever the optimizer deems it necessary. | Segments are sampled automatically whenever the optimizer detects that sampling is needed. | Automatically determined |

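The higher the sampling level, the more blocks are sampled and the closer the resulting statistics are to reality, but the resource cost rises as well. This is a trade-off that has to be weighed. The default level in both Oracle 10g and 11g is 2, as shown below. One way to change it is to set the optimizer_dynamic_sampling parameter for the session.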

SQL> show parameter optimizer_dynamic_sampling

NAME TYPE VALUE

------------------------------ ----------- -----------

optimizer_dynamic_sampling integer 2

SQL>

The other way is to set the level with a hint in the statement itself, which is very flexible and useful.
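The two ways of changing the level can be sketched as follows. This is only an illustration, assuming the table t and its flag columns from the earlier example; the level values are arbitrary.

```sql
-- Change the level for the current session only.
alter session set optimizer_dynamic_sampling = 4;

-- Table-level hint: the level applies to table T only
-- (use the alias here if the table has one in the query).
select /*+ dynamic_sampling(t 4) */ * from t where flag1 = 'N' and flag2 = 'N';

-- Statement-level hint: the level applies to the whole statement.
select /*+ dynamic_sampling(4) */ * from t where flag1 = 'N' and flag2 = 'N';

-- Return the session to the default level.
alter session set optimizer_dynamic_sampling = 2;
```

A hint keeps the higher sampling cost confined to the statements that need it, whereas the session setting affects every hard parse in that session.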

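Dynamic Sampling Caveats

Everything has its downside, and dynamic sampling is no silver bullet. The more blocks it samples, the higher the overhead and the longer the hard parse of the SQL takes. In a data warehouse (DW, OLAP) environment, where statements run for a long time and the hard parse is only a small part of the total execution time, the dynamic sampling level can reasonably be raised so that the optimizer obtains more accurate information. A level of 3 or 4 is usually appropriate.

In a highly concurrent OLTP system, by contrast, thousands of SQL statements execute every second, and they need to be short and fast. In an OLTP system the dynamic sampling level should therefore be lowered, or dynamic sampling should not be used at all. Tom's original text is quoted below: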

“When should I use dynamic sampling?” is a tricky question. As with any other feature, there are times to use it and times to avoid it. So far I’ve concentrated on the “goodness” of dynamic sampling, and based on that, it seems that you should set the level to 3 or 4 and just let the optimizer always use dynamic sampling to validate its guesses.

That makes sense in an environment in which you spend most of your time executing SQL and very little of your overall time hard-parsing the SQL. That is, the SQL you are executing runs for a long time and the parse time is a small portion of the overall execution time, such as in a data warehousing environment. There, dynamic sampling at levels above the default makes complete sense. You are willing to give the optimizer a little more time during a hard parse (when sampling takes place) to arrive at the optimal plan for these complex queries.

That leaves the other classic type of environment: the online transaction processing (OLTP) system. Here, in general, you are executing queries thousands of times per second and spend very little time executing a given query—the queries are typically small and fast. Increasing the parse time in an OLTP system might well cause you to spend more time parsing than executing SQL. You do not want to increase the parse times here, so higher levels of dynamic sampling would not be advisable

References:

http://www.oracle.com/technetwork/issue-archive/2009/09-jan/o19asktom-086775.html

https://blogs.oracle.com/optimizer/entry/dynamic_sampling_and_its_impact_on_the_optimizer

让Oracle跑得更快 (Make Oracle Run Faster), by 谭怀远 (Tan Huaiyuan)

http://blog.csdn.net/tianlesoftware/article/details/5845028

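As mentioned in the earlier discussion of the CBO in the Oracle optimizer, when a table has not been analyzed, Oracle uses dynamic sampling to collect statistics. Accurate statistics on segments (tables, table partitions, indexes, and so on) are the foundation of the CBO. The CBO works by collecting as much object and system information as possible and then computing, analyzing, and evaluating it to arrive at the execution plan with the lowest cost. For the CBO, therefore, analyzing segments is essential.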

Oracle Optimizer CBO RBO

http://blog.csdn.net/tianlesoftware/archive/2010/08/19/5824886.aspx

1. An example showing what analysis does

1.1 Create the table

SQL> create table t as select object_id,object_name from dba_objects where 1=2;

Table created.

SQL> create index index_t on t(object_id);

Index created.

SQL> insert into t select object_id,object_name from dba_objects;

72926 rows created.

SQL> commit;

Commit complete.

1.2 Check the table's statistics and the execution plan

SQL> select num_rows,avg_row_len,blocks,last_analyzed from user_tables where table_name='T';

NUM_ROWS AVG_ROW_LEN BLOCKS LAST_ANALYZED

---------- ----------- ---------- --------------

SQL> select blevel,leaf_blocks,distinct_keys,last_analyzed from user_indexes where table_name='T';

BLEVEL LEAF_BLOCKS DISTINCT_KEYS LAST_ANALYZED

---------- ----------- ------------- --------------

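0 0 0 25-AUG-10

The query results show that the table's row count, average row length, block count, and last-analyzed time are all empty. The index row contains only zeros, recorded when the index was created on the still-empty table, so it carries no useful information either. In other words, neither the table nor the index has usable statistics. If a SQL statement now queries the table, the CBO cannot obtain this information and may well generate a wrong execution plan, for example: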

SQL> set linesize 200

SQL> set autot trace exp;

SQL> select /*+dynamic_sampling(t 0) */ * from t where object_id>30;

Execution Plan

----------------------------------------------------------

Plan hash value: 80339723

---------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 316 | 0 (0)| 00:00:01 |

| 1 | TABLE ACCESS BY INDEX ROWID| T | 4 | 316 | 0 (0)| 00:00:01 |

|* 2 | INDEX RANGE SCAN | INDEX_T | 1 | | 0 (0)| 00:00:01 |

---------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("OBJECT_ID">30)

SQL>

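From Oracle 10g onward, if a table has not been analyzed, the database automatically performs dynamic sampling on it, so a hint is used here to set the dynamic sampling level to 0, that is, to disable dynamic sampling.

From this plan we can see that the CBO estimates that 4 rows satisfy the condition, so it uses the index. Now analyze the table and compare the results.

1.3 Analyze the table and check the execution plan afterwards

There are two ways to analyze a table:

One is the analyze command, for example: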

analyze table tablename compute statistics for all indexes;

The other is the DBMS_STATS package. Since 9i, Oracle has recommended DBMS_STATS for analyzing tables, because it offers more functionality and is more flexible to use.

SQL> exec dbms_stats.gather_table_stats('SYS','T');

PL/SQL procedure successfully completed.

SQL> select blevel,leaf_blocks,distinct_keys,last_analyzed from user_indexes where table_name='T';

BLEVEL LEAF_BLOCKS DISTINCT_KEYS LAST_ANALYZED

---------- ----------- ------------- --------------

1 263 72926 25-AUG-10

SQL> select num_rows,avg_row_len,blocks,last_analyzed from user_tables where table_name='T';

NUM_ROWS AVG_ROW_LEN BLOCKS LAST_ANALYZED

---------- ----------- ---------- --------------

72926 29 345 25-AUG-10

The results show that DBMS_STATS.gather_table_stats analyzed both the table and the index. Now look at the execution plan again.

SQL> set autot trace exp;

SQL> select * from t where object_id>30;

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 72899 | 2064K| 96 (2)| 00:00:02 |

|* 1 | TABLE ACCESS FULL| T | 72899 | 2064K| 96 (2)| 00:00:02 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("OBJECT_ID">30)

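From this plan we can see that the CBO estimates 72899 rows, very close to the actual 72926, and that a full table scan is now the better choice. The example also shows how important analysis is for the execution plan.

2. Histograms

The DBMS_STATS package analyzes a table segment at three levels:

(1) The table itself: the number of rows, the number of data blocks, the row length, and so on.

(2) The columns: the number of distinct values in a column, the number of nulls, and how the data is distributed in the column.

(3) The indexes: the number of leaf blocks, the depth of the index, the clustering factor, and so on.

A histogram describes the data distribution part of the column analysis.

When Oracle builds a histogram, it divides the data in the analyzed column into parts that hold the same number of rows; each part is called a bucket. This lets the CBO see easily how the values in the column are distributed, and that distribution becomes an important input when the cost of an execution plan is calculated.

Histograms are very useful for tables whose data distribution is heavily skewed. Take the values 1, 10, 20, 30, 40, 50: within each value range (bucket) the number of rows is roughly the same, so the data is evenly distributed. With 1, 5, 5, 5, 5, 10, 10, 20, 50, 100, by contrast, the distribution across buckets is heavily skewed.

Histograms can matter a great deal to the CBO, especially for tables with heavily skewed columns. They are gathered with the dbms_stats package. By default (method_opt set to FOR ALL COLUMNS SIZE AUTO), dbms_stats considers every column and lets Oracle decide which columns actually get a histogram. For example: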

SQL> exec dbms_stats.gather_table_stats('SYS','T',cascade=>true);

PL/SQL procedure successfully completed.

The related information can then be queried from the user_histograms view:

SQL> select table_name,column_name,endpoint_number,endpoint_value from user_histograms where table_name='T';

TABLE_NAME COLUMN_NAME ENDPOINT_NUMBER ENDPOINT_VALUE

------------------------------ -------------------- --------------- --------------

T OBJECT_ID 0 2

T OBJECT_NAME 0 2.4504E+35

T OBJECT_ID 1 76685

T OBJECT_NAME 1 1.0886E+36

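Here each column has only two endpoints (0 and 1), that is, just its lowest and highest value, so no detailed histogram was built for these columns. If the data in a column is heavily skewed, a histogram on that column is needed. However, analyzing data consumes resources, and for very large segments it takes particularly long; in an OLAP system it may take several hours to complete.

Whether to analyze is therefore something the DBA has to weigh. One point deserves attention: do not casually change the analysis strategy in a production environment unless you are completely sure of the outcome, because the consequences can be severe.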
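To see whether a column actually has a histogram, the column statistics view can be queried after gathering. The following is a minimal sketch, assuming the table T (columns object_id and object_name) from section 1 lives in the current schema; the explicit request for 254 buckets on object_id is only an illustration, not a recommendation.

```sql
-- Request a histogram explicitly on one column (up to 254 buckets).
begin
  dbms_stats.gather_table_stats(
    ownname    => user,
    tabname    => 'T',
    method_opt => 'for columns object_id size 254');
end;
/

-- HISTOGRAM shows NONE when a column has no histogram;
-- NUM_BUCKETS shows how many buckets were recorded.
select column_name, num_distinct, num_buckets, histogram
  from user_tab_col_statistics
 where table_name = 'T';
```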

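3. The DBMS_STATS package

The DBMS_STATS package can not only analyze tables; it also manages the statistics of the database (the performance data the optimizer relies on). Its functions fall into the following categories:

(1) Gathering statistics

(2) Setting statistics

(3) Deleting statistics

(4) Backing up and restoring statistics

For more information, see the Oracle online documentation: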

11g DBMS_STATS

http://download.oracle.com/docs/cd/E11882_01/appdev.112/e10577/d_stats.htm#ARPLS68486

10g DBMS_STATS

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461

3.1 Common DBMS_STATS functions: gathering, setting, and deleting statistics

Statistics are gathered with the following stored procedures:

GATHER_DATABASE_STATS Procedures

GATHER_DICTIONARY_STATS Procedure

GATHER_FIXED_OBJECTS_STATS Procedure

GATHER_INDEX_STATS Procedure

GATHER_SCHEMA_STATS Procedures

GATHER_SYSTEM_STATS Procedure

GATHER_TABLE_STATS Procedure

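As the names suggest, these procedures gather statistics for objects at different levels: the database, the data dictionary, tables, indexes, schemas, and so on.

3.1.1 The GATHER_TABLE_STATS procedure

In 10g, GATHER_TABLE_STATS takes the following parameters: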

DBMS_STATS.GATHER_TABLE_STATS (

ownname VARCHAR2,

tabname VARCHAR2,

partname VARCHAR2 DEFAULT NULL,

estimate_percent NUMBER DEFAULT to_estimate_percent_type

(get_param('ESTIMATE_PERCENT')),

block_sample BOOLEAN DEFAULT FALSE,

method_opt VARCHAR2 DEFAULT get_param('METHOD_OPT'),

degree NUMBER DEFAULT to_degree_type(get_param('DEGREE')),

granularity VARCHAR2 DEFAULT GET_PARAM('GRANULARITY'),

cascade BOOLEAN DEFAULT to_cascade_type(get_param('CASCADE')),

stattab VARCHAR2 DEFAULT NULL,

statid VARCHAR2 DEFAULT NULL,

statown VARCHAR2 DEFAULT NULL,

no_invalidate BOOLEAN DEFAULT to_no_invalidate_type (

get_param('NO_INVALIDATE')),

force BOOLEAN DEFAULT FALSE);

In 11g, the signature as listed is the same as in 10g:

DBMS_STATS.GATHER_TABLE_STATS (

ownname VARCHAR2,

tabname VARCHAR2,

partname VARCHAR2 DEFAULT NULL,

estimate_percent NUMBER DEFAULT to_estimate_percent_type

(get_param('ESTIMATE_PERCENT')),

block_sample BOOLEAN DEFAULT FALSE,

method_opt VARCHAR2 DEFAULT get_param('METHOD_OPT'),

degree NUMBER DEFAULT to_degree_type(get_param('DEGREE')),

granularity VARCHAR2 DEFAULT GET_PARAM('GRANULARITY'),

cascade BOOLEAN DEFAULT to_cascade_type(get_param('CASCADE')),

stattab VARCHAR2 DEFAULT NULL,

statid VARCHAR2 DEFAULT NULL,

statown VARCHAR2 DEFAULT NULL,

no_invalidate BOOLEAN DEFAULT to_no_invalidate_type (

get_param('NO_INVALIDATE')),

force BOOLEAN DEFAULT FALSE);

The parameters are described below:

| Parameter | Description |

| ownname | Schema of table to analyze |

| tabname | Name of table |

| partname | Name of partition |

| estimate_percent | Percentage of rows to estimate (NULL means compute). The valid range is [0.000001,100]. Use the constant DBMS_STATS.AUTO_SAMPLE_SIZE to have Oracle determine the appropriate sample size for good statistics. This is the default. The default value can be changed using the SET_PARAM Procedure. |

| block_sample | Whether or not to use random block sampling instead of random row sampling. Random block sampling is more efficient, but if the data is not randomly distributed on disk, then the sample values may be somewhat correlated. Only pertinent when doing an estimate statistics. |

| method_opt | Accepts: FOR ALL [INDEXED | HIDDEN] COLUMNS [size_clause] FOR COLUMNS [size clause] column|attribute [size_clause] [,column|attribute [size_clause]...] size_clause is defined as size_clause := SIZE {integer | REPEAT | AUTO | SKEWONLY} The default is FOR ALL COLUMNS SIZE AUTO. The default value can be changed using the SET_PARAM Procedure. |

| degree | Degree of parallelism. The default for degree is NULL. The default value can be changed using the SET_PARAM Procedure. NULL means use the table default value specified by the DEGREE clause in the CREATE TABLE or ALTER TABLE statement. Use the constant DBMS_STATS.DEFAULT_DEGREE to specify the default value based on the initialization parameters. The AUTO_DEGREE value determines the degree of parallelism automatically. This is either 1 (serial execution) or DEFAULT_DEGREE (the system default value based on number of CPUs and initialization parameters) according to size of the object. |

| granularity | Granularity of statistics to collect (only pertinent if the table is partitioned). 'ALL' - gathers all (subpartition, partition, and global) statistics 'AUTO'- determines the granularity based on the partitioning type. This is the default value. 'DEFAULT' - gathers global and partition-level statistics. This option is obsolete, and while currently supported, it is included in the documentation for legacy reasons only. You should use the 'GLOBAL AND PARTITION' for this functionality. Note that the default value is now 'AUTO'. 'GLOBAL' - gathers global statistics 'GLOBAL AND PARTITION' - gathers the global and partition level statistics. No subpartition level statistics are gathered even if it is a composite partitioned object. 'PARTITION '- gathers partition-level statistics 'SUBPARTITION' - gathers subpartition-level statistics. |

| cascade | Gather statistics on the indexes for this table. Index statistics gathering is not parallelized. Using this option is equivalent to running the GATHER_INDEX_STATS Procedure on each of the table's indexes. Use the constant DBMS_STATS.AUTO_CASCADE to have Oracle determine whether index statistics to be collected or not. This is the default. The default value can be changed using the SET_PARAM Procedure. |

| stattab | User statistics table identifier describing where to save the current statistics |

| statid | Identifier (optional) to associate with these statistics within stattab |

| statown | Schema containing stattab (if different than ownname) |

| no_invalidate | Does not invalidate the dependent cursors if set to TRUE. The procedure invalidates the dependent cursors immediately if set to FALSE. Use DBMS_STATS.AUTO_INVALIDATE to have Oracle decide when to invalidate dependent cursors. This is the default. The default can be changed using the SET_PARAM Procedure. |

| force | Gather statistics of table even if it is locked |

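Of all the parameters of gather_table_stats, only ownname and tabname have no default value. When the procedure is called with just these two, Oracle analyzes the table using the defaults for everything else. For example: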

SQL> exec dbms_stats.gather_table_STATS('SYS','T');

PL/SQL procedure successfully completed.

To see the current default of a parameter, use the dbms_stats.get_param function:

SQL> select dbms_stats.get_param('method_opt') from dual;

DBMS_STATS.GET_PARAM('METHOD_OPT')

------------------------------------------------------------

FOR ALL COLUMNS SIZE AUTO

Together with the parameter description above:

- AUTO : Oracle determines the columns to collect histograms based on data distribution and the workload of the columns.

we can see that all columns are considered for histograms, and that Oracle itself decides which columns get one and how many buckets it uses.
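In 11g and later, defaults are managed as preferences, which is why the parameter descriptions refer to procedures such as SET_TABLE_PREFS. The sketch below uses dbms_stats.get_prefs and dbms_stats.set_table_prefs, neither of which appears in the example above, and assumes the table T exists in the current schema. The preference value chosen is only an illustration.

```sql
-- Current defaults for a few gathering parameters (11g and later).
select dbms_stats.get_prefs('ESTIMATE_PERCENT') as estimate_percent,
       dbms_stats.get_prefs('METHOD_OPT')       as method_opt,
       dbms_stats.get_prefs('CASCADE')          as cascade
  from dual;

-- Override the default for a single table. Later gathers on T that do
-- not pass method_opt explicitly pick up this preference; SIZE 1 means
-- no histograms are built.
exec dbms_stats.set_table_prefs(user, 'T', 'METHOD_OPT', 'FOR ALL COLUMNS SIZE 1');
```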

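3.1.1.1 The estimate_percent parameter

This parameter is a percentage; it tells the package what share of the table's rows to use for the analysis.

In theory, the more data is sampled, the closer the statistics are to reality and the better the execution plans the CBO produces. However, more sampling also consumes more system resources and has a greater impact on the system, so the value should be chosen according to the workload. If the data is fairly evenly distributed, the default AUTO_SAMPLE_SIZE can be used, which lets Oracle determine the sampling percentage. Sometimes, particularly for bulk-loaded tables, the amount of data can be predicted and a reasonable value can be set manually. For a very large segment, such as a table partition with 10 million rows, a small percentage can be used. Note that the value is a percentage within the valid range [0.000001, 100]: at the lower bound of 0.000001, a 10-million-row partition would yield only about 0.1 sampled row, so the value actually chosen needs to be well above that bound.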
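The effect of estimate_percent is easy to work out before choosing a value. The following sketch assumes a table T in the current schema; the 5 percent setting and the column aliases are arbitrary illustrations.

```sql
-- Let Oracle choose the sample size (the default).
exec dbms_stats.gather_table_stats(user, 'T', estimate_percent => dbms_stats.auto_sample_size);

-- Sample an explicit 5 percent of the rows.
exec dbms_stats.gather_table_stats(user, 'T', estimate_percent => 5);

-- Rows sampled = row count * estimate_percent / 100.
-- For 10,000,000 rows: 1 percent is 100,000 rows,
-- and 0.000001 percent is 0.1 row.
select 10000000 * 1 / 100        as rows_at_1_percent,
       10000000 * 0.000001 / 100 as rows_at_minimum_percent
  from dual;
```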

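3.1.1.2 The method_opt parameter

This parameter controls how histograms are gathered.

FOR ALL [INDEXED | HIDDEN] COLUMNS [size_clause]

FOR COLUMNS [size clause] column|attribute [size_clause] [,column|attribute [size_clause]...]

This gives four ways to specify which columns are analyzed:

(1) All columns: for all columns

(2) Indexed columns: only columns that have an index are analyzed, for all indexed columns

(3) Hidden columns: only hidden columns are analyzed, for all hidden columns

(4) Explicitly named columns: the columns to analyze are listed explicitly, for columns column_name

The default value of this parameter is for all columns size auto.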
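The four forms listed above can be sketched as calls to gather_table_stats. The example assumes a table T with a column object_id in the current schema; the size clauses are illustrative choices.

```sql
-- (1) All columns; Oracle decides which ones get a histogram (the default).
exec dbms_stats.gather_table_stats(user, 'T', method_opt => 'for all columns size auto');

-- (2) Indexed columns only.
exec dbms_stats.gather_table_stats(user, 'T', method_opt => 'for all indexed columns size auto');

-- (3) Hidden columns only.
exec dbms_stats.gather_table_stats(user, 'T', method_opt => 'for all hidden columns size auto');

-- (4) An explicit column list, here with up to 254 buckets on OBJECT_ID.
exec dbms_stats.gather_table_stats(user, 'T', method_opt => 'for columns object_id size 254');
```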

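3.1.1.3 The degree parameter

Specifies the degree of parallelism used for the analysis. The possible settings are:

(1) NULL: Oracle uses the degree of parallelism defined on the table being analyzed, either the one specified when the table was created or the one set later with alter table.

(2) A number: explicitly sets the degree of parallelism used for the analysis.

(3) DEFAULT_DEGREE: Oracle derives the degree of parallelism from the relevant initialization parameters.

The default value of this parameter is NULL, which means that the parallelism attribute of the table determines the degree used for the analysis. When the table or table partition to be analyzed is very large and system resources are plentiful, analyzing in parallel can speed things up considerably. If system resources are tight, on the other hand, enabling parallelism may be counterproductive.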
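The settings above map onto calls like the following. This is a sketch that assumes a table T in the current schema; the value 4 is an arbitrary example, and AUTO_DEGREE is the additional constant mentioned in the parameter description table.

```sql
-- Use the degree of parallelism defined on the table (the default).
exec dbms_stats.gather_table_stats(user, 'T', degree => null);

-- Use an explicit degree of parallelism.
exec dbms_stats.gather_table_stats(user, 'T', degree => 4);

-- Derive the degree from the initialization parameters.
exec dbms_stats.gather_table_stats(user, 'T', degree => dbms_stats.default_degree);

-- Let Oracle pick serial or default parallelism based on object size.
exec dbms_stats.gather_table_stats(user, 'T', degree => dbms_stats.auto_degree);
```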

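3.1.1.4 The granularity parameter

The granularity of the analysis. The possible settings are:

(1) ALL: analyzes the table at the global, partition, and subpartition level.

(2) AUTO: Oracle decides which granularity to use based on the partitioning type.

(3) GLOBAL: analyzes at the global level only.

(4) GLOBAL AND PARTITION: analyzes at the global and partition level but not at the subpartition level, which is the difference from ALL.

(5) PARTITION: analyzes at the partition level only.

(6) SUBPARTITION: analyzes at the subpartition level only.

In production, especially in OLAP or data warehouse environments, this setting directly affects the execution plans the CBO chooses.

A common pattern in OLAP or data warehouse systems is to create a new partition, load a batch of data (usually a large one) into it, analyze the partition, and then run reports or data mining. Ideally both the global and the partition level of the table are analyzed, which gives the most complete information. Such tables are usually very large, however, and running a global analysis every time a partition is added consumes a great deal of system resources. If only the newly added partition is analyzed and no global analysis is done, the information Oracle has at the global level becomes inaccurate.

By default, DBMS_STATS analyzes both the table level (global) and the partition level (corresponding to the value PARTITION). If cascade is set to true, the corresponding indexes are also analyzed at the global and partition level. If only the partition level is analyzed and the global level is not, the global information is not updated, which can still lead the CBO to a wrong execution plan.

How to analyze newly inserted data is therefore an important question. The following guidelines usually apply:

(1) Look at the share of the newly inserted data in the whole table. If the share is small, the global analysis can be skipped; otherwise it has to be considered, based on how the business actually runs.

(2) Sampling percentage. If the amount of loaded data is very large, for example tens of millions of rows or more, reduce the sampling percentage as far as possible. The lower limit is that it must not keep the CBO from choosing a correct execution plan; the upper limit is that it must not consume so many resources that normal business operation is affected.

(3) Newly loaded data should be analyzed at the partition level. Whether a histogram is needed, and with how many buckets (set with the size clause), is for the DBA to decide based on the data distribution; the deciding factor is how skewed the data is.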
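The load-then-analyze pattern described above might look like the following sketch. The table name SALES_FACT and the partition name P_2010_08 are hypothetical and stand for any partitioned table and its newly loaded partition; when to refresh the global statistics is a judgment call along the lines of the guidelines above.

```sql
-- Right after the load: analyze only the new partition and its index partitions.
begin
  dbms_stats.gather_table_stats(
    ownname     => user,
    tabname     => 'SALES_FACT',   -- hypothetical partitioned table
    partname    => 'P_2010_08',    -- hypothetical newly loaded partition
    granularity => 'PARTITION',
    cascade     => true);
end;
/

-- Later, for example in a maintenance window: refresh the global statistics.
begin
  dbms_stats.gather_table_stats(
    ownname     => user,
    tabname     => 'SALES_FACT',
    granularity => 'GLOBAL',
    cascade     => true);
end;
/
```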

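3.1.2 The GATHER_SCHEMA_STATS procedure

This procedure analyzes all objects owned by a given user. When a schema contains a large number of objects, configuring the analysis for each object separately is very inconvenient, and this procedure makes the job much easier. Its advantage is that it greatly reduces the DBA's workload when many objects have to be analyzed; its drawback is that every object is analyzed with the same strategy, which may not be optimal for all of them. Whether to use it depends on the actual situation.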
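A schema-wide gather can be sketched as follows. The schema name APP_OWNER is hypothetical, and the parameter values simply spell out settings that are already the documented defaults, plus cascade => true to include the indexes.

```sql
begin
  dbms_stats.gather_schema_stats(
    ownname          => 'APP_OWNER',   -- hypothetical schema name
    estimate_percent => dbms_stats.auto_sample_size,
    method_opt       => 'for all columns size auto',
    cascade          => true,          -- also gather index statistics
    options          => 'GATHER');     -- gather for all objects in the schema
end;
/
```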

The procedure takes the following parameters:

DBMS_STATS.GATHER_SCHEMA_STATS (

ownname VARCHAR2,

estimate_percent NUMBER DEFAULT to_estimate_percent_type

(get_param('ESTIMATE_PERCENT')),

block_sample BOOLEAN DEFAULT FALSE,

method_opt VARCHAR2 DEFAULT get_param('METHOD_OPT'),

degree NUMBER DEFAULT to_degree_type(get_param('DEGREE')),

granularity VARCHAR2 DEFAULT GET_PARAM('GRANULARITY'),

cascade BOOLEAN DEFAULT to_cascade_type(get_param('CASCADE')),

stattab VARCHAR2 DEFAULT NULL,

statid VARCHAR2 DEFAULT NULL,

options VARCHAR2 DEFAULT 'GATHER',

objlist OUT ObjectTab,

statown VARCHAR2 DEFAULT NULL,

no_invalidate BOOLEAN DEFAULT to_no_invalidate_type (

get_param('NO_INVALIDATE')),

force BOOLEAN DEFAULT FALSE,

obj_filter_list ObjectTab DEFAULT NULL);

The parameters are described below:

| Parameter | Description |

| ownname | Schema to analyze (NULL means current schema) |

| estimate_percent | Percentage of rows to estimate (NULL means compute). The valid range is [0.000001,100]. Use the constant DBMS_STATS.AUTO_SAMPLE_SIZE to have Oracle determine the appropriate sample size for good statistics. This is the default. The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |

| block_sample | Whether or not to use random block sampling instead of random row sampling. Random block sampling is more efficient, but if the data is not randomly distributed on disk, then the sample values may be somewhat correlated. Only pertinent when doing an estimate statistics. |

| method_opt | Accepts: FOR ALL [INDEXED | HIDDEN] COLUMNS [size_clause] size_clause is defined as size_clause := SIZE {integer | REPEAT | AUTO |SKEWONLY} The default is FOR ALL COLUMNS SIZE AUTO.The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure,SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |

| degree | Degree of parallelism. The default for degree is NULL. The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. NULLmeans use the table default value specified by the DEGREE clause in theCREATE TABLE or ALTER TABLE statement. Use the constantDBMS_STATS.DEFAULT_DEGREE to specify the default value based on the initialization parameters.The AUTO_DEGREE value determines the degree of parallelism automatically. This is either 1 (serial execution) or DEFAULT_DEGREE (the system default value based on number of CPUs and initialization parameters) according to size of the object. |

| granularity | Granularity of statistics to collect (only pertinent if the table is partitioned). 'ALL' - gathers all (subpartition, partition, and global) statistics 'AUTO'- determines the granularity based on the partitioning type. This is the default value. 'DEFAULT' - gathers global and partition-level statistics. This option is obsolete, and while currently supported, it is included in the documentation for legacy reasons only. You should use the 'GLOBAL AND PARTITION' for this functionality. Note that the default value is now 'AUTO'. 'GLOBAL' - gathers global statistics 'GLOBAL AND PARTITION' - gathers the global and partition level statistics. No subpartition level statistics are gathered even if it is a composite partitioned object. 'PARTITION '- gathers partition-level statistics 'SUBPARTITION' - gathers subpartition-level statistics. |

| cascade | Gather statistics on the indexes as well. Using this option is equivalent to running the GATHER_INDEX_STATS Procedure on each of the indexes in the schema in addition to gathering table and column statistics. Use the constant DBMS_STATS.AUTO_CASCADE to have Oracle determine whether index statistics to be collected or not. This is the default. The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |

| stattab | User statistics table identifier describing where to save the current statistics |

| statid | Identifier (optional) to associate with these statistics within stattab |

| options | Further specification of which objects to gather statistics for: GATHER: Gathers statistics on all objects in the schema. GATHER AUTO: Gathers all necessary statistics automatically. Oracle implicitly determines which objects need new statistics, and determines how to gather those statistics. When GATHER AUTO is specified, the only additional valid parameters are ownname, stattab, statid, objlist andstatown; all other parameter settings are ignored. Returns a list of processed objects. GATHER STALE: Gathers statistics on stale objects as determined by looking at the *_tab_modifications views. Also, return a list of objects found to be stale. GATHER EMPTY: Gathers statistics on objects which currently have no statistics. also, return a list of objects found to have no statistics. LIST AUTO: Returns a list of objects to be processed with GATHER AUTO. LIST STALE: Returns list of stale objects as determined by looking at the *_tab_modifications views. LIST EMPTY: Returns list of objects which currently have no statistics. |

| objlist | List of objects found to be stale or empty |

| statown | Schema containing stattab (if different than ownname) |

| no_invalidate | Does not invalidate the dependent cursors if set to TRUE. The procedure invalidates the dependent cursors immediately if set to FALSE. UseDBMS_STATS.AUTO_INVALIDATE. to have Oracle decide when to invalidate dependent cursors. This is the default. The default can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure,SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |

| force | Gather statistics on objects even if they are locked |

| obj_filter_list | A list of object filters. When provided, GATHER_SCHEMA_STATS will gather statistics only on objects which satisfy at least one object filter in the list as needed. In a single object filter, we can specify the constraints on the object attributes. The attribute values specified in the object filter are case- insensitive unless double-quoted. Wildcard is allowed in the attribute values. Suppose non-NULL values s1, s2, ... are specified for attributes a1, a2, ... in one object filter. An object o is said to satisfy this object filter if (o.a1 like s1) and (o.a2 like s2) and ... is true. See Applying an Object Filter List. |
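As a rough illustration of the options and objlist parameters described in the table, the sketch below first lists the stale objects in a schema and then gathers statistics only for them. The schema name APP_OWNER is an assumption made for the example; the record fields objtype and objname come from the DBMS_STATS.ObjectElem type.

```sql
SET SERVEROUTPUT ON

DECLARE
  l_objects DBMS_STATS.ObjectTab;   -- filled by the LIST STALE call
BEGIN
  -- Step 1: report which objects Oracle considers stale (nothing is gathered yet).
  DBMS_STATS.GATHER_SCHEMA_STATS(
    ownname => 'APP_OWNER',         -- hypothetical schema
    options => 'LIST STALE',
    objlist => l_objects);

  FOR i IN 1 .. l_objects.COUNT LOOP
    DBMS_OUTPUT.PUT_LINE(l_objects(i).objtype || ' ' || l_objects(i).objname);
  END LOOP;

  -- Step 2: gather statistics for the stale objects only, indexes included.
  DBMS_STATS.GATHER_SCHEMA_STATS(
    ownname          => 'APP_OWNER',
    estimate_percent => DBMS_STATS.AUTO_SAMPLE_SIZE,
    cascade          => TRUE,
    options          => 'GATHER STALE');
END;
/
```

Listing before gathering is a design choice: it shows how much work the gather will do before any resources are spent on it.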

3.1.3 DBMS_STATS.GATHER_INDEX_STATS Procedure

This procedure analyzes an index. If cascade=>true is set when calling DBMS_STATS.GATHER_TABLE_STATS, Oracle also runs this procedure to analyze the table's indexes.

Procedure parameters:

DBMS_STATS.GATHER_INDEX_STATS (

ownname VARCHAR2,

indname VARCHAR2,

partname VARCHAR2 DEFAULT NULL,

estimate_percent NUMBER DEFAULT to_estimate_percent_type(GET_PARAM('ESTIMATE_PERCENT')),

stattab VARCHAR2 DEFAULT NULL,

statid VARCHAR2 DEFAULT NULL,

statown VARCHAR2 DEFAULT NULL,

degree NUMBER DEFAULT to_degree_type(get_param('DEGREE')),

granularity VARCHAR2 DEFAULT GET_PARAM('GRANULARITY'),

no_invalidate BOOLEAN DEFAULT to_no_invalidate_type(GET_PARAM('NO_INVALIDATE')),

force BOOLEAN DEFAULT FALSE);

| Parameter | Description |
| --- | --- |
| ownname | Schema of index to analyze |
| indname | Name of index |
| partname | Name of partition |
| estimate_percent | Percentage of rows to estimate (NULL means compute). The valid range is [0.000001,100]. Use the constant DBMS_STATS.AUTO_SAMPLE_SIZE to have Oracle determine the appropriate sample size for good statistics. This is the default. The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |
| stattab | User statistics table identifier describing where to save the current statistics |
| statid | Identifier (optional) to associate with these statistics within stattab |
| statown | Schema containing stattab (if different than ownname) |
| degree | Degree of parallelism. The default for degree is NULL. The default value can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. NULL means use of the default value that was specified by the DEGREE clause in the CREATE/ALTER INDEX statement. Use the constant DBMS_STATS.DEFAULT_DEGREE for the default value based on the initialization parameters. The AUTO_DEGREE value determines the degree of parallelism automatically. This is either 1 (serial execution) or DEFAULT_DEGREE (the system default value based on number of CPUs and initialization parameters) according to size of the object. |
| granularity | Granularity of statistics to collect (only pertinent if the table is partitioned). 'ALL' - gathers all (subpartition, partition, and global) statistics. 'AUTO' - determines the granularity based on the partitioning type. This is the default value. 'DEFAULT' - gathers global and partition-level statistics. This option is obsolete, and while currently supported, it is included in the documentation for legacy reasons only. You should use 'GLOBAL AND PARTITION' for this functionality. Note that the default value is now 'AUTO'. 'GLOBAL' - gathers global statistics. 'GLOBAL AND PARTITION' - gathers the global and partition level statistics. No subpartition level statistics are gathered even if it is a composite partitioned object. 'PARTITION' - gathers partition-level statistics. 'SUBPARTITION' - gathers subpartition-level statistics. |
| no_invalidate | Does not invalidate the dependent cursors if set to TRUE. The procedure invalidates the dependent cursors immediately if set to FALSE. Use DBMS_STATS.AUTO_INVALIDATE to have Oracle decide when to invalidate dependent cursors. This is the default. The default can be changed using the SET_DATABASE_PREFS Procedure, SET_GLOBAL_PREFS Procedure, SET_SCHEMA_PREFS Procedure and SET_TABLE_PREFS Procedure. |
| force | Gather statistics on object even if it is locked |
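A minimal call using the parameters above might look like the following. The owner APP_OWNER and index ORDERS_PK are placeholder names chosen for illustration; the follow-up query simply reads back what was gathered from the ALL_INDEXES dictionary view.

```sql
-- Hypothetical objects: schema APP_OWNER, index ORDERS_PK.
BEGIN
  DBMS_STATS.GATHER_INDEX_STATS(
    ownname          => 'APP_OWNER',
    indname          => 'ORDERS_PK',
    estimate_percent => DBMS_STATS.AUTO_SAMPLE_SIZE,  -- let Oracle pick the sample size
    degree           => DBMS_STATS.AUTO_DEGREE);      -- serial or parallel, by object size
END;
/

-- Inspect the index statistics that the CBO will now see.
SELECT index_name, blevel, leaf_blocks, clustering_factor, last_analyzed
FROM   all_indexes
WHERE  owner = 'APP_OWNER'
AND    index_name = 'ORDERS_PK';
```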

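The three commonly used procedures have been discussed above. Statistics gathering is very important to the CBO. If you cannot devise a gathering strategy that fits your own system, the following problems may arise:

(1) Insufficient statistics lead the CBO to produce a wrong execution plan, resulting in poor SQL performance.

(2) Excessive statistics gathering severely degrades system performance.

3.2 Management Functions of the DBMS_STATS Package

3.2.1 Retrieving Statistics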

GET_COLUMN_STATS Procedures

GET_INDEX_STATS Procedures

GET_SYSTEM_STATS Procedure

GET_TABLE_STATS Procedure

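These four procedures retrieve column, index, system, and table statistics respectively. To use them, first declare variables for the statistics you want, then call the procedure to populate those variables, and finally print the values. For example: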

SQL> set serveroutput on

SQL> declare
  dist number;               -- number of distinct values
  dens number;               -- column density
  ncnt number;               -- number of NULL values
  orec dbms_stats.statrec;   -- min/max/histogram record; a record cannot be printed with to_char
  avgc number;               -- average column length in bytes
begin
  dbms_stats.get_column_stats('SYS','T','object_ID',distcnt=>dist,density=>dens,nullcnt=>ncnt,srec=>orec,avgclen=>avgc);
  dbms_output.put_line('the distcnt is:' ||to_char(dist));
  dbms_output.put_line('the density is:' ||to_char(dens));
  dbms_output.put_line('the nullcnt is:' ||to_char(ncnt));
  dbms_output.put_line('the avgclen is:' ||to_char(avgc));
end;
/

the distcnt is:72926

the density is:.0000137125305103804

the nullcnt is:0

the avgclen is:5

PL/SQL procedure successfully completed.

For more information, see:

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
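The same declare, call, print pattern applies to the other GET_* procedures. As a sketch, assuming the current user owns an analyzed table named T, GET_TABLE_STATS can be used like this; on a table without statistics the call is expected to raise an error rather than return NULLs.

```sql
SET SERVEROUTPUT ON

DECLARE
  l_numrows NUMBER;   -- number of rows
  l_numblks NUMBER;   -- number of blocks
  l_avgrlen NUMBER;   -- average row length in bytes
BEGIN
  DBMS_STATS.GET_TABLE_STATS(
    ownname => USER,
    tabname => 'T',               -- assumed table name
    numrows => l_numrows,
    numblks => l_numblks,
    avgrlen => l_avgrlen);

  DBMS_OUTPUT.PUT_LINE('numrows: ' || TO_CHAR(l_numrows));
  DBMS_OUTPUT.PUT_LINE('numblks: ' || TO_CHAR(l_numblks));
  DBMS_OUTPUT.PUT_LINE('avgrlen: ' || TO_CHAR(l_avgrlen));
END;
/
```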

3.2.2 Setting Statistics

SET_COLUMN_STATS Procedures

SET_INDEX_STATS Procedures

SET_SYSTEM_STATS Procedure

SET_TABLE_STATS Procedure

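These procedures let us manually assign values to column, index, system, and table statistics. One use is when inaccurate statistics lead to a bad execution plan: the statistics can then be set by hand. In extreme cases this is a legitimate way to solve the problem.

For the detailed usage of these four procedures, see the Oracle online documentation: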

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
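As an illustration of setting statistics by hand, the sketch below assigns table-level values to a table T owned by the current user. The row count 72236 and row length 28 echo the figures that appear in the dynamic sampling example later in this article; the block count of 200 is purely an assumed value.

```sql
BEGIN
  DBMS_STATS.SET_TABLE_STATS(
    ownname => USER,
    tabname => 'T',        -- assumed table name
    numrows => 72236,      -- row count the CBO should assume
    numblks => 200,        -- assumed number of blocks
    avgrlen => 28);        -- average row length in bytes
END;
/

-- Confirm what the optimizer will now read from the dictionary.
SELECT table_name, num_rows, blocks, avg_row_len
FROM   user_tables
WHERE  table_name = 'T';
```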

3.2.3 Deleting Statistics

DELETE_COLUMN_STATS Procedure

DELETE_DATABASE_STATS Procedure

DELETE_DICTIONARY_STATS Procedure

DELETE_FIXED_OBJECTS_STATS Procedure

DELETE_INDEX_STATS Procedure

DELETE_SCHEMA_STATS Procedure

DELETE_SYSTEM_STATS Procedure

DELETE_TABLE_STATS Procedure

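When statistics become abnormal and cause the CBO to make a wrong decision, deleting them is one way to correct the problem immediately. For example, after a table's statistics are deleted, the CBO performs dynamic sampling on the table again and obtains a correct result.

Statistics can be deleted at the column, database, data dictionary, fixed object, index, and table levels, among others.

For details, see the Oracle online documentation: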

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
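A small sketch of the remedy described above, assuming a table T owned by the current user: delete its statistics, then confirm that the dictionary no longer holds any, so that the next hard parse falls back to dynamic sampling.

```sql
BEGIN
  DBMS_STATS.DELETE_TABLE_STATS(
    ownname => USER,
    tabname => 'T');   -- assumed table name
END;
/

-- NUM_ROWS and LAST_ANALYZED should now be NULL for the table.
SELECT table_name, num_rows, last_analyzed
FROM   user_tables
WHERE  table_name = 'T';
```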

3.2.4 Saving Statistics

CREATE_STAT_TABLE Procedure

DROP_STAT_TABLE Procedure

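These two procedures create a table for storing statistics, which makes the statistics easier to manage, and drop that table when it is no longer needed.

For details, see the Oracle online documentation: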

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461

3.2.5 Importing and Exporting Statistics

EXPORT_COLUMN_STATS Procedure

EXPORT_DATABASE_STATS Procedure

EXPORT_DICTIONARY_STATS Procedure

EXPORT_FIXED_OBJECTS_STATS Procedure

EXPORT_INDEX_STATS Procedure

EXPORT_SCHEMA_STATS Procedure

EXPORT_SYSTEM_STATS Procedure

EXPORT_TABLE_STATS Procedure

IMPORT_COLUMN_STATS Procedure

IMPORT_DATABASE_STATS Procedure

IMPORT_DICTIONARY_STATS Procedure

IMPORT_FIXED_OBJECTS_STATS Procedure

IMPORT_INDEX_STATS Procedure

IMPORT_SCHEMA_STATS Procedure

IMPORT_SYSTEM_STATS Procedure

IMPORT_TABLE_STATS Procedure

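These procedures export existing statistics into a user-created statistics table for safekeeping, and import them back from that table when needed.

For details, see the Oracle online documentation: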

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
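Saving and restoring through a statistics table can be sketched as follows. The statistics table name STATS_BACKUP, the identifier BEFORE_LOAD, and the table T are all assumed names for the example.

```sql
-- Create the holding table once, then export the current statistics of T into it.
BEGIN
  DBMS_STATS.CREATE_STAT_TABLE(
    ownname => USER,
    stattab => 'STATS_BACKUP');

  DBMS_STATS.EXPORT_TABLE_STATS(
    ownname => USER,
    tabname => 'T',
    stattab => 'STATS_BACKUP',
    statid  => 'BEFORE_LOAD',   -- label for this saved set
    cascade => TRUE);           -- include column and index statistics
END;
/

-- Later, if new statistics cause trouble, bring the saved set back.
BEGIN
  DBMS_STATS.IMPORT_TABLE_STATS(
    ownname => USER,
    tabname => 'T',
    stattab => 'STATS_BACKUP',
    statid  => 'BEFORE_LOAD',
    cascade => TRUE);
END;
/

-- When the saved sets are no longer needed:
-- EXEC DBMS_STATS.DROP_STAT_TABLE(ownname => USER, stattab => 'STATS_BACKUP');
```

Using a statid lets several saved sets for the same table coexist in one statistics table.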

3.2.6 Locking Statistics

LOCK_SCHEMA_STATS Procedure

LOCK_TABLE_STATS Procedure

UNLOCK_SCHEMA_STATS Procedure

UNLOCK_TABLE_STATS Procedure

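The LOCK_* procedures either freeze the current set of statistics or keep the statistics empty (uncollected). When statistics on a table are locked, all the statistics depending on the table, including table statistics, column statistics, histograms and statistics on all dependent indexes, are considered to be locked.

Sometimes we are satisfied that the current statistics are good, the execution plans are accurate, and the table's data hardly changes. In that case LOCK_TABLE_STATS can lock the table's statistics so that the table can no longer be analyzed or have its statistics set. Once a table's statistics are locked, all dependent statistics, including table-level statistics, column-level statistics, histograms, and index statistics, are locked and cannot be updated.

For details, see the Oracle online documentation: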

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
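A brief sketch of locking and unlocking, assuming a table T owned by the current user. The STATTYPE_LOCKED column of USER_TAB_STATISTICS shows whether a lock is in place.

```sql
-- Freeze the current statistics of T.
BEGIN
  DBMS_STATS.LOCK_TABLE_STATS(ownname => USER, tabname => 'T');
END;
/

-- STATTYPE_LOCKED is non-NULL (for example ALL) while the lock is in place.
SELECT table_name, stattype_locked
FROM   user_tab_statistics
WHERE  table_name = 'T';

-- Release the lock when the data starts changing again.
BEGIN
  DBMS_STATS.UNLOCK_TABLE_STATS(ownname => USER, tabname => 'T');
END;
/
```

While the lock is held, a gather call on the table fails unless it passes force => TRUE, the parameter described in the tables earlier.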

3.2.7 Restoring Statistics

RESET_PARAM_DEFAULTS Procedure

RESTORE_DICTIONARY_STATS Procedure

RESTORE_FIXED_OBJECTS_STATS Procedure

RESTORE_SCHEMA_STATS Procedure

RESTORE_SYSTEM_STATS Procedure

RESTORE_TABLE_STATS Procedure

Whenever statistics in dictionary are modified, old versions of statistics are saved automatically for future restoring. The old statistics are purged automatically at regular intervals based on the statistics history retention setting and the time of recent statistics gathering performed in the system. Retention is configurable using the ALTER_STATS_HISTORY_RETENTION Procedure.

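For example, suppose we re-analyze a table and find that the new statistics cause the CBO to choose a wrong execution plan. To recover, restore the statistics to an earlier point in time at which the CBO's plans were correct; fix the immediate problem first, then investigate the cause.

For details, see the Oracle online documentation: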

http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_stats.htm#i1036461
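The recovery scenario can be sketched as below, assuming a table T owned by the current user and assuming that the statistics from one day ago were the good ones. The history view shows which restore points exist.

```sql
-- See when the statistics of T were changed, to pick a restore point.
SELECT table_name, stats_update_time
FROM   user_tab_stats_history
WHERE  table_name = 'T'
ORDER  BY stats_update_time;

-- Put back the statistics as they were 24 hours ago (assumed good point).
BEGIN
  DBMS_STATS.RESTORE_TABLE_STATS(
    ownname         => USER,
    tabname         => 'T',
    as_of_timestamp => SYSTIMESTAMP - INTERVAL '1' DAY);
END;
/
```

A restore only works within the retention window mentioned above, so the chosen timestamp must not be older than the retained history.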

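IV. Dynamic Sampling

4.1 What Is Dynamic Sampling

Dynamic sampling was first introduced in Oracle 9i R2. It was devised so that the CBO can obtain enough information to produce a correct execution plan when segments (tables, indexes, partitions) have not been analyzed. It can be regarded as a supplement to statistics gathering.

When a segment has no statistics (that is, it has not been analyzed), dynamic sampling obtains the statistics the CBO needs by sampling data blocks directly from the object.

A simple example:

Create the table: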

SQL> create table t

2 as

3 select owner,object_type from all_objects;

Table created.

Check the number of rows in the table:

SQL> select count(*) from t;

COUNT(*)

----------

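72236 -- row count

Here an ordinary table has been created and not analyzed. We use a hint with level 0 to disable dynamic sampling. The only information the CBO can then use is what the data dictionary stores about the table, such as the number of extents and blocks, and that is not enough.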

SQL> set autot traceonly explain

SQL> select /*+dynamic_sampling(t 0) */ * from t;

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 15928 | 435K| 55 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| T | 15928 | 435K| 55 (0)| 00:00:01 |

Without dynamic sampling, the CBO estimates 15928 rows, far from the actual 72236.

Now look at the plan with dynamic sampling enabled:

SQL> select * from t;

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 80232 | 2193K| 56 (2)| 00:00:01 |

| 1 | TABLE ACCESS FULL| T | 80232 | 2193K| 56 (2)| 00:00:01 |

--------------------------------------------------------------------------

Note

-----

- dynamic sampling used for this statement (level=2)

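Oracle 10g samples unanalyzed segments dynamically by default. The output above shows that level 2 dynamic sampling was used, and the CBO's estimate of 80232 is much closer to the actual 72236.

One point to note:

Without dynamic sampling, the CBO may also badly overestimate the row count of an unanalyzed segment. For example: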

SQL> delete from t;

72236 rows deleted.

SQL> commit;

Commit complete.

SQL> select /*+dynamic_sampling(t 0) */ * from t;

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 15928 | 435K| 55 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| T | 15928 | 435K| 55 (0)| 00:00:01 |

--------------------------------------------------------------------------

SQL> select * from t;

Execution Plan

----------------------------------------------------------

Plan hash value: 1601196873

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 28 | 55 (0)| 00:00:01 |

| 1 | TABLE ACCESS FULL| T | 1 | 28 | 55 (0)| 00:00:01 |

--------------------------------------------------------------------------

Note

-----

- dynamic sampling used for this statement (level=2)

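A careful reader will notice the difference between the two plans. Without dynamic sampling, the CBO still estimates 15928 rows for table t, whereas with dynamic sampling it estimates 1 row. All rows had been deleted before the queries ran. The cause is the high water mark: although the rows were deleted, the extents and blocks allocated to the table were not reclaimed, so without sampling the CBO still assumes that much data is present.

This shows how limited the CBO's information is in this situation: only how many extents and blocks the table has. With dynamic sampling, Oracle discovers immediately that the blocks are all empty.

For more on the Oracle high water mark, see my blog post Oracle High Water Mark (HWM):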

http://blog.csdn.net/tianlesoftware/archive/2009/10/22/4707900.aspx
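The high water mark effect can be observed from the data dictionary. The following is a sketch, run as the owner of table T from the example above: the allocation reported by USER_SEGMENTS is unchanged by the DELETE, while a TRUNCATE (with its default storage behavior) releases the space. Note that TRUNCATE is DDL and cannot be rolled back.

```sql
-- After the DELETE: the segment still holds the same extents and blocks.
SELECT segment_name, extents, blocks
FROM   user_segments
WHERE  segment_name = 'T';

-- TRUNCATE resets the high water mark and deallocates the space.
TRUNCATE TABLE t;

-- The allocation should now be back to the table's initial size.
SELECT segment_name, extents, blocks
FROM   user_segments
WHERE  segment_name = 'T';
```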

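Dynamic sampling serves two purposes:

(1) The CBO depends on complete statistics, but not every user analyzes every table carefully and promptly. To keep execution plans as accurate as possible, Oracle uses dynamic sampling to help the CBO obtain as much information as it can.

(2) Global temporary tables. Temporary tables are usually not analyzed, because their data is transient and may be released quickly. When a query joins to such a temporary table, the CBO can obtain statistics for it only through dynamic sampling.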
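The temporary table case can be sketched like this. The table gtt_orders and its columns are invented for the example; the hint asks the optimizer to sample the table when the statement is parsed, and the Note section of the displayed plan indicates whether sampling was used.

```sql
-- Hypothetical global temporary table; no statistics are gathered on it.
CREATE GLOBAL TEMPORARY TABLE gtt_orders (
  order_id NUMBER,
  status   VARCHAR2(10)
) ON COMMIT PRESERVE ROWS;

-- Load some session-private rows.
INSERT INTO gtt_orders
SELECT object_id, SUBSTR(object_type, 1, 10)
FROM   all_objects;

-- Let the optimizer sample the temporary table while building the plan.
EXPLAIN PLAN FOR
SELECT /*+ dynamic_sampling(g 2) */ COUNT(*)
FROM   gtt_orders g
WHERE  g.status = 'TABLE';

SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);
```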

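Besides supplying statistics for unanalyzed segments, dynamic sampling has one unique ability: it can measure the correlation between different columns.

By contrast, the statistics gathered by table analysis are independent of one another. For example:

(1) The table's row count and average row length.

(2) For each column, the maximum value, minimum value, degree of repetition, and possibly a histogram.

(3) For each index, the clustering factor, number of leaf blocks, height, and so on.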
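The column correlation point can be illustrated with a small experiment. This is a sketch with an invented table t_corr: the two flag columns always hold opposite values, so the combined predicate matches no rows, yet per-column statistics treat the columns as independent and would typically estimate about a quarter of the table. Comparing the two plans shows whether level 4 sampling corrects the estimate.

```sql
-- flag1 and flag2 are perfectly (negatively) correlated.
CREATE TABLE t_corr AS
SELECT DECODE(MOD(ROWNUM, 2), 0, 'N', 'Y') AS flag1,
       DECODE(MOD(ROWNUM, 2), 0, 'Y', 'N') AS flag2
FROM   all_objects;

BEGIN
  DBMS_STATS.GATHER_TABLE_STATS(ownname => USER, tabname => 'T_CORR');
END;
/

-- Plan based on gathered statistics only.
EXPLAIN PLAN FOR
SELECT * FROM t_corr WHERE flag1 = 'N' AND flag2 = 'N';
SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);

-- Same query, but sampled at level 4 (single-table predicates on 2+ columns).
EXPLAIN PLAN FOR
SELECT /*+ dynamic_sampling(t_corr 4) */ *
FROM   t_corr
WHERE  flag1 = 'N' AND flag2 = 'N';
SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);
```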

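Despite these advantages, dynamic sampling has obvious drawbacks; otherwise Oracle would simply use it in place of gathered statistics:

(1) Only a limited number of blocks are sampled, so for very large tables the result is inevitably somewhat off.

(2) Sampling consumes system resources. It is particularly discouraged in OLTP databases.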

4.2 动态采样的级别

Oracle defines 11 levels of dynamic sampling (0 through 10), described in detail in the official Oracle documentation.

13.5.7.4 Dynamic Sampling Levels

http://download.oracle.com/docs/cd/E11882_01/server.112/e10821/stats.htm#PFGRF94760

The sampling levels are as follows if the dynamic sampling level used is from a cursor hint or from the OPTIMIZER_DYNAMIC_SAMPLING initialization parameter:

Level 0: Do not use dynamic sampling.

Level 1: Sample all tables that have not been analyzed if the following criteria are met: (1) there is at least 1 unanalyzed table in the query; (2) this unanalyzed table is joined to another table or appears in a subquery or non-mergeable view; (3) this unanalyzed table has no indexes; (4) this unanalyzed table has more blocks than the number of blocks that would be used for dynamic sampling of this table. The number of blocks sampled is the default number of dynamic sampling blocks (32).

Level 2: Apply dynamic sampling to all unanalyzed tables. The number of blocks sampled is two times the default number of dynamic sampling blocks.

Level 3: Apply dynamic sampling to all tables that meet Level 2 criteria, plus all tables for which standard selectivity estimation used a guess for a predicate that is a potential dynamic sampling predicate. The number of blocks sampled is the default number of dynamic sampling blocks. For unanalyzed tables, the number of blocks sampled is twice the default number of dynamic sampling blocks.

Level 4: Apply dynamic sampling to all tables that meet Level 3 criteria, plus all tables that have single-table predicates that reference 2 or more columns. The number of blocks sampled is the default number of dynamic sampling blocks. For unanalyzed tables, the number of blocks sampled is two times the default number of dynamic sampling blocks.

Levels 5, 6, 7, 8, and 9: Apply dynamic sampling to all tables that meet the previous level criteria using 2, 4, 8, 32, or 128 times the default number of dynamic sampling blocks respectively.

Level 10: Apply dynamic sampling to all tables that meet the Level 9 criteria using all blocks in the table.

The sampling levels are as follows if the dynamic sampling level for a table is set using the DYNAMIC_SAMPLING optimizer hint:

Level 0: Do not use dynamic sampling.

Level 1: The number of blocks sampled is the default number of dynamic sampling blocks (32).

Levels 2, 3, 4, 5, 6, 7, 8, and 9: The number of blocks sampled is 2, 4, 8, 16, 32, 64, 128, or 256 times the default number of dynamic sampling blocks respectively.

Level 10: Read all blocks in the table.
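For the table-level hint, the multipliers listed above double at each level, so the number of blocks sampled at levels 1 through 9 is 32 × 2^(level − 1). The query below is just that arithmetic worked out, assuming the default of 32 sampling blocks quoted in the text; level 0 samples nothing and level 10 reads the whole table.

```sql
-- Blocks sampled per table-level hint, assuming a default of 32 blocks.
SELECT LEVEL                    AS hint_level,
       POWER(2, LEVEL - 1)      AS multiplier,
       32 * POWER(2, LEVEL - 1) AS blocks_sampled
FROM   dual
CONNECT BY LEVEL <= 9;
```

Level 1 works out to 32 blocks and level 9 to 8192 blocks, which gives a feel for how quickly the sampling cost grows.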

4.2.1 Level 0

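No dynamic sampling is performed.

4.2.2 Level 1

Oracle samples unanalyzed tables dynamically, but only when all four of the following conditions are met.

(1) The SQL statement contains at least one unanalyzed table.

(2) The unanalyzed table is joined to another table or appears in a subquery or non-mergeable view.

(3) The unanalyzed table has no indexes.

(4) The unanalyzed table occupies more blocks than the default number of dynamic sampling blocks (32).

4.2.3 Level 2

Dynamic sampling is applied to all unanalyzed tables. The number of blocks sampled is twice the default.

4.2.4 Level 3

The sampled tables include all tables that meet the Level 2 criteria, plus tables for which standard selectivity estimation had to guess at a predicate that is a potential dynamic sampling predicate. For these tables the number of blocks sampled is the default; for unanalyzed tables it is twice the default.

4.2.5 Level 4

The sampled tables include all tables that meet the Level 3 criteria, plus tables that have single-table predicates referencing two or more columns. The number of blocks sampled is the default; for unanalyzed tables it is twice the default.

4.2.6 Levels 5, 6, 7, 8, 9

The sampled tables are those that meet the criteria of the previous level, sampled with 2, 4, 8, 32, or 128 times the default number of dynamic sampling blocks respectively.

4.2.7 Level 10

The sampled tables include all tables that meet the Level 9 criteria, and all blocks in each table are sampled.

The more blocks are sampled, the closer the resulting statistics are to reality, but the more resources are consumed.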

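4.3 When to Use Dynamic Sampling

Dynamic sampling consumes additional database resources. In an environment where SQL is executed repeatedly with bind variables and hard parses are rare, such as an OLTP system, dynamic sampling is not appropriate. It takes place at hard parse time, so if hard parses rarely happen it has little effect.

In an OLAP or data warehouse environment, executing a SQL statement costs far more than parsing it, so spending a little more at parse time on dynamic sampling to arrive at the best execution plan is well worth it. In such environments the cost of a hard parse is almost negligible.

Therefore, in OLAP or data warehouse environments it is generally a good idea to set the dynamic sampling level to 3 or 4. In OLTP systems, by contrast, dynamic sampling should not be used.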
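As a sketch of how the recommendation might be applied, the level can be checked and raised for a single reporting session, or scoped to one statement with a cursor-level hint. The table T is the one from the earlier example; reading v$parameter assumes the session has the privilege to query it.

```sql
-- Current setting of the initialization parameter.
SELECT name, value
FROM   v$parameter
WHERE  name = 'optimizer_dynamic_sampling';

-- Raise the level for this session only, e.g. for a warehouse report.
ALTER SESSION SET optimizer_dynamic_sampling = 4;

-- Or limit the change to one statement with a cursor-level hint.
SELECT /*+ dynamic_sampling(4) */ owner, COUNT(*)
FROM   t
GROUP  BY owner;
```

Keeping the change at session or statement scope leaves OLTP workloads on the same instance unaffected.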

About Me

...............................................................................................................................

● This article was compiled from online sources: http://www.cnblogs.com/kerrycode/p/3854971.html, http://blog.csdn.net/tianlesoftware/article/details/5845028

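● Completed in Shanghai between 2017-05-09 09:00 and 2017-05-30 22:00

● The content comes from 小麦苗's study notes, with parts compiled from the web; apologies for any infringement or inaccuracy

● All rights reserved. Sharing is welcome; please credit the source when reposting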

...............................................................................................................................
