Diggit: Mining Git repositories for developer insights

I’m looking for feedback on what to include in the analysis suite of diggit. Please comment on this article, open an issue at github.com/lawrencejones/diggit or contact me directly at [email protected] if you have any ideas about metrics that could provide value to you when reviewing code in your day-to-day work. Thanks!

By flagging code smells in review, diggit raises awareness of problems at a point in the development process where code can easily be changed, with an audience of developers who are already familiar with the program's context.

Reaching back into a project's history means comments can reflect patterns of interaction with the code, rather than a shallow analysis of its current state. Tracking how conventional code health measures change over time can enhance their utility, giving developers insight into the project's future health while they still have the power to change it.

Background

I’m a final year student at Imperial College London, studying Computing. diggit is my MEng project, which started as research into how to weaponise software archaeology techniques for the average developer.

This research has led to a tool that fits into a project's continuous integration setup where, like a CI testing provider, I pull down a repo on significant changes, run my analysis and then return the feedback as comments on a GitHub pull request.
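As a rough illustration of the last step of that pipeline, the sketch below builds (but does not send) a request that posts feedback as a comment on a pull request. It assumes GitHub's REST endpoint for issue comments, which also accepts pull request numbers; the function name, the `octo/demo` repository and the token are hypothetical, and this is not necessarily how diggit itself talks to GitHub.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_pr_comment_request(owner: str, repo: str, pr_number: int,
                             body: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST that adds `body` as a PR comment.

    Hypothetical helper: pull requests are issues in GitHub's REST API,
    so a general comment goes to the issue-comments endpoint for the PR.
    """
    url = f"{GITHUB_API}/repos/{owner}/{repo}/issues/{pr_number}/comments"
    payload = json.dumps({"body": body}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_pr_comment_request("octo", "demo", 7, "Analysis results...", token="<token>")
print(req.get_method(), req.full_url)
# POST https://api.github.com/repos/octo/demo/issues/7/comments
```

Passing the request to `urllib.request.urlopen` would actually send it; keeping construction separate makes the payload easy to inspect before anything is posted.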

What red flags can diggit detect?

As overall project goals, diggit’s stated objectives are to detect when…

1. Files show signs of growing complexity

2. Changes suggest the current architecture is hindering development

3. Past modifications have included changes that are absent from the proposed change

The first analysis step implemented is the concept of Refactoring Diligence, which fits into goal (1) and was introduced by Michael Feathers in this article (https://michaelfeathers.silvrback.com/detecting-refactoring-diligence). Feathers suggests you can generate a profile of project health by analysing how many methods have consecutively increased in size over the project history.

[135, 89, 14, 2, 1, 1, 1, 1, 1]

This array tells me that, for a particular repository, there are 135 methods that increased in size the last time they were changed, and 89 methods that increased in size the last two times they were changed. The last element of the array tells us that a single method has increased in size the last nine times it was changed. Thankfully, no method has grown the last ten times it was changed.

This profile is a reduction of quite detailed statistics (for each method, the chain of its historical sizes going back to the most recent point at which it decreased in size) and demonstrates the power of a macro-measurement to express a dataset otherwise too large to comprehend. But how do we take action on this information? Refactor the entire codebase?
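To make the reduction concrete, here is a minimal sketch of how such a profile could be computed. It assumes each method's history is available as a list of sizes (for example, line counts) after each change, oldest first, and it treats the first recorded size as a baseline rather than as growth. Following the wording above, entry k counts methods whose last k changes were all increases, so a method that grew three times in a row contributes to the first three entries. The function and method names are hypothetical, not diggit's own.

```python
from typing import Dict, List


def growth_streak(sizes: List[int]) -> int:
    """Count how many of the most recent changes increased the method's size.

    Walks backwards from the latest size and stops at the first change
    that did not make the method bigger.
    """
    streak = 0
    for i in range(len(sizes) - 1, 0, -1):
        if sizes[i] > sizes[i - 1]:
            streak += 1
        else:
            break
    return streak


def diligence_profile(histories: Dict[str, List[int]]) -> List[int]:
    """Entry k-1 counts methods whose last k changes were all increases."""
    streaks = [growth_streak(sizes) for sizes in histories.values()]
    longest = max(streaks, default=0)
    return [sum(1 for s in streaks if s >= k) for k in range(1, longest + 1)]


# Hypothetical size histories, oldest first.
histories = {
    "method_a": [10, 12, 15, 20],  # grew on each of its last 3 changes
    "method_b": [8, 6, 9],         # grew once after shrinking
    "method_c": [4, 4],            # unchanged in size
    "method_d": [30, 25],          # shrank
}
print(diligence_profile(histories))  # [2, 1, 1]
```

The streaks here are 3, 1, 0 and 0, so two methods grew on their last change and one of them kept growing for three changes in a row.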

If we look at the metric applied to the methods of a popular library such as Sinatra, we can immediately see that some stick out more than others…

What if we could receive warnings about these methods when we change them, which is surely when we have the most power to address the problem? We may not even be aware that we’re contributing to a chain of method growth, too focused on the change at hand to realise that this method has red flags.

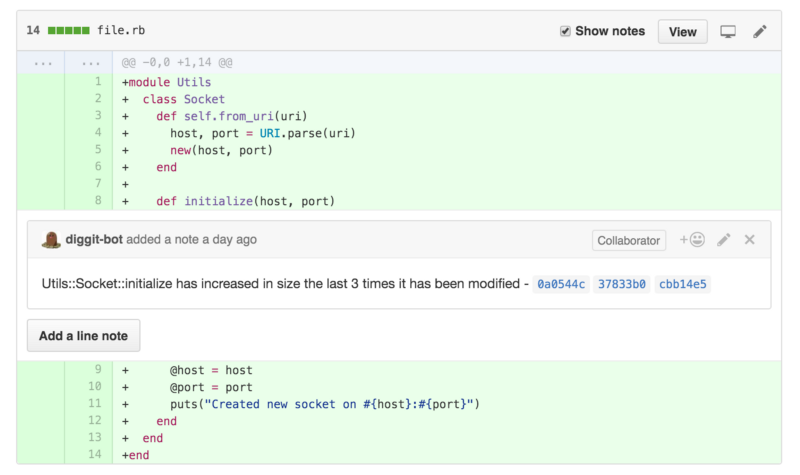

This is where diggit steps in. On the pull request for this unsuspecting change, diggit shows how the change fits into the context of the project's history, pointing out that the area you just touched has been made less robust because of it.
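The check behind such a warning could be as simple as appending the proposed size to a method's history and measuring the resulting growth streak. The sketch below assumes that approach; `review_comment`, its `min_streak` threshold of 3 and the message wording are all hypothetical, chosen only to illustrate a deliberately conservative default.

```python
from typing import List, Optional


def review_comment(method: str, history: List[int], new_size: int,
                   min_streak: int = 3) -> Optional[str]:
    """Return a warning if the proposed size extends a long growth streak.

    `history` holds the method's sizes after each past change, oldest first;
    `new_size` is its size after the change under review. The threshold
    `min_streak` is a hypothetical default, not a value taken from diggit.
    """
    sizes = history + [new_size]
    streak = 0
    for i in range(len(sizes) - 1, 0, -1):
        if sizes[i] <= sizes[i - 1]:
            break  # the chain of growth ends at the first non-increase
        streak += 1
    if streak < min_streak:
        return None
    return (f"{method} has now grown in each of its last {streak} changes; "
            f"consider refactoring it while you are here.")


print(review_comment("method_a", [10, 12, 15], 20))
print(review_comment("method_a", [10, 12, 15], 14))  # None: this change shrinks it
```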

How can you help?

The key challenge for diggit will be finding the analysis metrics that provide real value to developers. No one wants their pull requests spammed with useless information, which is why I need feedback from developers about what diggit can provide that will add to their code reviews: information that can be gleaned by traversing project history, and insights that those making the changes may not be aware of.

Over the next couple of weeks I’ll be implementing detection of increasing file complexity, high-churn hotspots and problematic temporal coupling. Every new analysis step will start life false-positive averse, and from there I’ll modify thresholds in response to user feedback. You can sign your projects up at https://diggit-repo.com.
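As a hedged sketch of what the churn and temporal coupling analyses might start from, the code below counts how often each file changes (churn) and how often pairs of files change in the same commit. It assumes commits are given as lists of file paths (for instance, parsed from `git log` output) and uses one common definition of coupling degree: shared commits divided by the average number of commits touching each file. None of these names come from diggit, and the file names are made up.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, Tuple


def churn_and_co_changes(commits: Iterable[Iterable[str]]) -> Tuple[Counter, Counter]:
    """Count revisions per file and co-changes per (sorted) file pair."""
    revisions: Counter = Counter()
    shared: Counter = Counter()
    for files in commits:
        unique = sorted(set(files))  # ignore duplicate paths within a commit
        revisions.update(unique)
        for pair in combinations(unique, 2):  # pairs come out in sorted order
            shared[pair] += 1
    return revisions, shared


def coupling_degree(revisions: Counter, shared: Counter, a: str, b: str) -> float:
    """Shared commits divided by the average revision count of the two files."""
    key = tuple(sorted((a, b)))
    average = (revisions[a] + revisions[b]) / 2
    return shared[key] / average if average else 0.0


commits = [
    ["app.rb", "routes.rb"],
    ["app.rb", "routes.rb", "README.md"],
    ["app.rb"],
]
revisions, shared = churn_and_co_changes(commits)
print(revisions.most_common(1))  # [('app.rb', 3)]
print(coupling_degree(revisions, shared, "routes.rb", "app.rb"))  # 0.8
```

Pairs with a high degree and enough shared commits are natural candidates for goal (3), flagging a change that touches one file but not its usual partner, though the thresholds would need tuning against real feedback.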

Please leave comments on this article about any features you could imagine fitting well within this review setup. I’m open to any ideas and would love to work with people to extract quality insights from project version control history, those of highest utility to real developers!

References

Michael Feathers, Refactoring Diligence: inspiration for the Refactoring Diligence analysis metric

http://www.ticosa.org/: general software archaeology resources

Adam Tornhill, Your Code as a Crime Scene: practical applications of software archaeology methods
