Building scalable private services with Internal Load Balancing

Cloud load balancers are a key part of building resilient and highly elastic services, allowing you to think less about infrastructure and focus more on your applications. But the applications themselves are evolving: they are becoming highly distributed and made up of multiple tiers. Many of these are delivered as internal-only microservices. That’s why we’re excited to announce the general availability of Internal Load Balancing, a fully managed flavor of Google Cloud Load Balancing that enables you to build scalable and highly available internal services for internal client instances without requiring the load balancers to be exposed to the Internet.

In the past year, we’ve described our Global Load Balancing and Network Load Balancing technologies in detail at the GCP NEXT and NSDI conferences. More recently, we revealed that GCP customer Niantic deployed HTTP(S) Load Balancing, alongside Google Container Engine and other GCP technologies, to scale its wildly popular game Pokémon GO. Today Internal Load Balancing joins our arsenal of cloud load balancers to deliver the scale, performance and availability your private services need.

Internal Load Balancing architecture

When we present Cloud Load Balancing, one of the questions we get is “Where is the load balancer?” and then “How many connections per second can it support?”

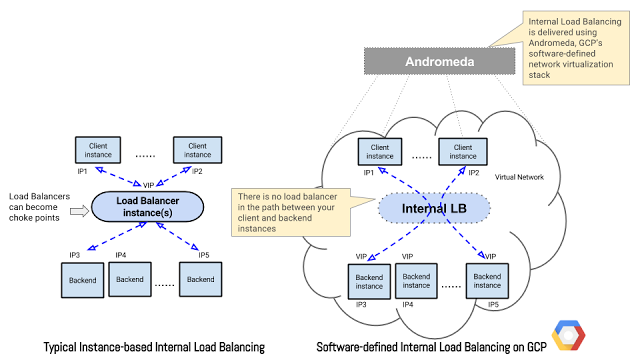

Similar to our HTTP(S) Load Balancer and Network Load Balancer, Internal Load Balancing is neither a hardware appliance nor an instance-based solution. It is software-defined load balancing delivered using Andromeda, Google’s network virtualization stack.

As a result, your internal load balancer is “everywhere” you need it in your virtual network, but “nowhere” as a choke point in this network. Because there is no load balancer in the path between your client and backend instances, Internal Load Balancing does not impose its own connections-per-second ceiling and can support as many connections per second as your services need.
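
To make the “everywhere but nowhere” idea concrete, here is a toy Python model, not Andromeda’s actual implementation: each client host runs its own copy of the backend-selection logic and picks a backend by hashing the connection’s 5-tuple. Because every host computes the same answer locally, no shared box sits in the data path to cap connections per second. The `HostVirtualSwitch` class, the SHA-256 hashing and the backend addresses are all illustrative assumptions.

```python
from __future__ import annotations

import hashlib
from collections import Counter

# Hypothetical backend addresses behind one internal load-balancing IP.
BACKENDS = ["10.240.0.2", "10.240.0.3", "10.240.0.4"]

Flow = tuple  # (src_ip, src_port, dst_ip, dst_port, protocol)


def pick_backend(flow: Flow, backends: list[str]) -> str:
    """Map a flow's 5-tuple to one backend with a stable hash (toy model)."""
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


class HostVirtualSwitch:
    """Hypothetical per-host data plane; not a real Andromeda or GCP API."""

    def __init__(self, host: str, backends: list[str]):
        self.host = host
        self.backends = backends

    def forward(self, flow: Flow) -> str:
        # The decision is made on the client's own host, so no central
        # load balancer has to see every connection.
        return pick_backend(flow, self.backends)


if __name__ == "__main__":
    host_a = HostVirtualSwitch("host-a", BACKENDS)
    # Spread 1,000 connections from one client across the backends.
    counts = Counter(
        host_a.forward(("192.168.1.1", port, "10.240.0.200", 80, "tcp"))
        for port in range(40000, 41000)
    )
    print(counts)
```

Since the hash depends only on the flow, any host (or any replica of the logic) that sees the same 5-tuple sends it to the same backend, which is what lets the selection be distributed without coordination in this model.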

Internal Load Balancing features

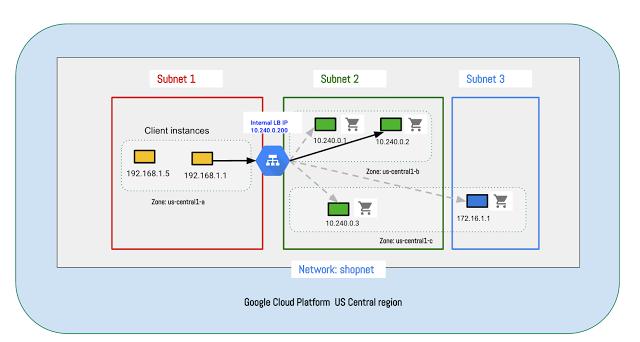

Internal Load Balancing enables you to distribute internal client traffic across backends running private services. In the example shown in the diagram, a client instance (192.168.1.1) in Subnet 1 connects to the Internal Load Balancing IP (10.240.0.200) and is load balanced to a backend instance (10.240.0.2) in Subnet 2.
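
The addressing in this example can be checked with Python’s standard `ipaddress` module. The sketch below tests that each address falls in one of the three RFC 1918 private ranges. The subnet prefixes (`192.168.1.0/24` for Subnet 1 and `10.240.0.0/16` for Subnet 2) are assumptions, since the post gives only host addresses.

```python
import ipaddress

# The three private IPv4 ranges defined by RFC 1918. ipaddress's built-in
# `is_private` also covers other ranges (e.g. loopback), so list them explicitly.
RFC1918 = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(addr: str) -> bool:
    """True if `addr` lies inside one of the RFC 1918 private ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in RFC1918)


# Hypothetical subnet prefixes; the example names only the host addresses.
SUBNET_1 = ipaddress.ip_network("192.168.1.0/24")
SUBNET_2 = ipaddress.ip_network("10.240.0.0/16")

client, ilb_ip, backend = "192.168.1.1", "10.240.0.200", "10.240.0.2"

if __name__ == "__main__":
    for name, addr in [("client", client), ("ILB IP", ilb_ip), ("backend", backend)]:
        print(name, addr, "RFC 1918:", is_rfc1918(addr))
```

Under these assumed prefixes, the client sits in a different subnet from both the load-balancing IP and the backend, which matches the cross-subnet path described above.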

With Internal Load Balancing, you can:

- Configure a private RFC 1918 load-balancing IP from within your virtual network;

- Load balance across instances in multiple availability zones within a region;

- Configure session affinity to ensure that traffic from a client is load balanced to the same backend instance;

- Configure high-fidelity TCP, SSL (TLS), HTTP or HTTPS health checks;

- Get instant scaling for your backend instances with no pre-warming; and

- Get all the benefits of a fully managed load balancing service. You no longer have to worry about load balancer availability or the load balancer being a choke point.
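
The sketch below shows how two of these features interact when a backend is chosen: unhealthy instances are skipped, and with client-IP session affinity every connection from the same client maps to the same backend. The affinity names `NONE` and `CLIENT_IP` mirror settings on Google Cloud backend services, but the hashing here is a simplified stand-in, not Google’s algorithm, and the `Backend` and `select_backend` names are hypothetical.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class Backend:
    ip: str
    healthy: bool = True  # in a real deployment, set by health checking


def _stable_hash(key: str) -> int:
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


def select_backend(backends: list[Backend], client_ip: str, client_port: int,
                   affinity: str = "NONE") -> Backend:
    """Pick a healthy backend; CLIENT_IP affinity ignores the client port."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends")
    if affinity == "CLIENT_IP":
        key = client_ip  # same client IP -> same backend
    elif affinity == "NONE":
        key = f"{client_ip}:{client_port}"  # each connection hashed separately
    else:
        raise ValueError(f"affinity not modeled in this sketch: {affinity}")
    return healthy[_stable_hash(key) % len(healthy)]


if __name__ == "__main__":
    pool = [Backend("10.240.0.2"), Backend("10.240.0.3"),
            Backend("10.240.0.4", healthy=False)]
    for port in (40000, 40001, 40002):
        chosen = select_backend(pool, "192.168.1.1", port, affinity="CLIENT_IP")
        print(port, "->", chosen.ip)
```

One caveat of this toy: with plain modulo hashing, a change in the set of healthy backends can remap clients to different instances, which is why many production load balancers pair hashing with consistent hashing or connection tracking.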

Configuring Internal Load Balancing

You can configure Internal Load Balancing using the REST API, the gcloud CLI or the Google Cloud Console. See the Internal Load Balancing documentation to learn more about configuring it.
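
As an illustration of the gcloud path, the Python sketch below assembles (but does not run) four commands that set up a minimal internal TCP load balancer in current gcloud syntax: a health check, a regional backend service with `--load-balancing-scheme=internal`, an instance-group backend, and an internal forwarding rule that owns the private IP. Resource names, region, network, subnet and address are hypothetical placeholders, and flags may differ from the gcloud release that was current when Internal Load Balancing launched, so check each command’s `--help` output before use.

```python
from __future__ import annotations

import shlex


def ilb_commands(name: str, region: str, zone: str, network: str, subnet: str,
                 address: str, port: int, instance_group: str) -> list[list[str]]:
    """Return gcloud argument lists for a minimal internal TCP load balancer.

    All resource names are derived from `name`; every argument is a placeholder.
    """
    hc, be, fr = f"{name}-hc", f"{name}-be", f"{name}-fr"
    return [
        # 1. TCP health check probing the service port.
        ["gcloud", "compute", "health-checks", "create", "tcp", hc, f"--port={port}"],
        # 2. Regional backend service using the internal scheme and client-IP affinity.
        ["gcloud", "compute", "backend-services", "create", be,
         "--load-balancing-scheme=internal", "--protocol=tcp",
         f"--region={region}", f"--health-checks={hc}",
         "--session-affinity=CLIENT_IP"],
        # 3. Attach a zonal instance group as a backend.
        ["gcloud", "compute", "backend-services", "add-backend", be,
         f"--region={region}", f"--instance-group={instance_group}",
         f"--instance-group-zone={zone}"],
        # 4. Internal forwarding rule that owns the private load-balancing IP.
        ["gcloud", "compute", "forwarding-rules", "create", fr,
         f"--region={region}", "--load-balancing-scheme=internal",
         f"--network={network}", f"--subnet={subnet}",
         f"--address={address}", "--ip-protocol=TCP", f"--ports={port}",
         f"--backend-service={be}", f"--backend-service-region={region}"],
    ]


if __name__ == "__main__":
    for cmd in ilb_commands("my-ilb", "us-central1", "us-central1-a", "my-network",
                            "my-subnet", "10.240.0.200", 80, "my-ig"):
        print(shlex.join(cmd))  # print only; pass to subprocess.run to execute
```

Building argument lists instead of shell strings avoids quoting problems if you later hand them to `subprocess.run`.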

The (use) case for Internal Load Balancing

Internal Load Balancing delivers private RFC 1918 load balancing within your virtual private networks in GCP. Let’s walk through three interesting Internal Load Balancing use cases:

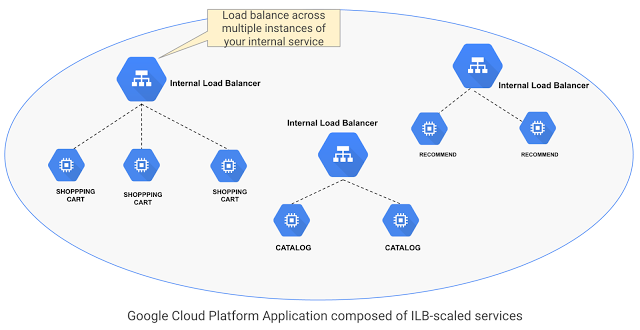

1. Scaling your internal services

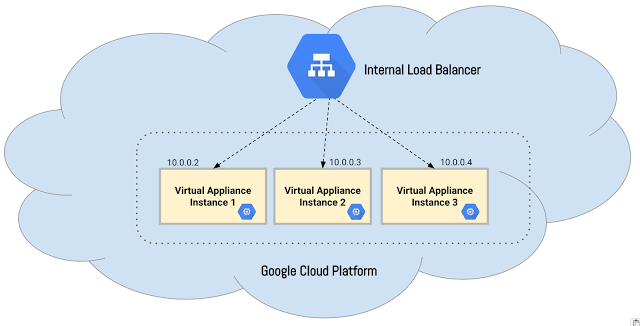

In a typical microservices architecture, you deliver availability and scale for each service by deploying multiple instances of it and using an internal load balancer to distribute traffic across these instances. Internal Load Balancing does this, and when its backends are in a managed instance group with autoscaling enabled, the number of instances also grows and shrinks to handle changes in traffic to your service.
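
With utilization-based autoscaling, an autoscaler roughly aims for enough instances to bring average utilization back to its target. The function below is a simplified sketch of that arithmetic, assuming integer utilization percentages and ignoring the stabilization and cool-down behavior a real autoscaler applies; `recommended_size` is a hypothetical name.

```python
def recommended_size(current_size: int, avg_util_pct: int, target_util_pct: int,
                     min_size: int, max_size: int) -> int:
    """Simplified utilization-based autoscaling: ceil(size * avg / target), clamped.

    Integer percentages keep the ceiling exact (no floating-point rounding).
    """
    if not 0 < target_util_pct <= 100:
        raise ValueError("target utilization must be in (0, 100]")
    # Integer ceiling division of current_size * avg_util_pct by target_util_pct.
    needed = (current_size * avg_util_pct + target_util_pct - 1) // target_util_pct
    # Respect the instance group's configured bounds.
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    # Four instances averaging 90% against a 60% target need six instances.
    print(recommended_size(4, 90, 60, min_size=1, max_size=10))
```

For example, `recommended_size(4, 90, 60, min_size=1, max_size=10)` returns 6, and the internal load balancer simply spreads traffic across however many healthy instances exist.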

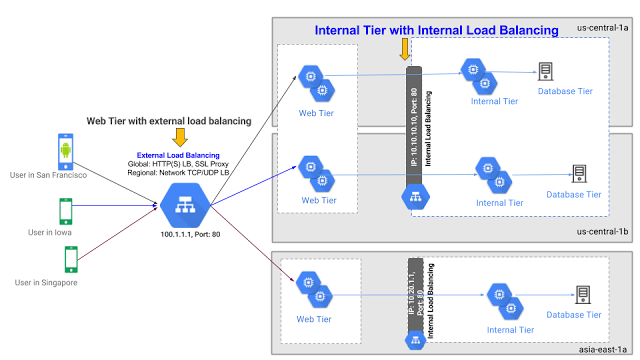

2. Building multi-tier applications on GCP

Internal Load Balancing is a critical component for building n-tier apps. For example, you can deploy HTTP(S) Load Balancing as the global web front-end load balancer across web-tier instances. You can then deploy the application server instances (represented as the Internal Tier below) behind a regional internal load balancer and send requests from your web tier instances to it.
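
The request path through these tiers can be modeled in a few lines. In this hypothetical sketch, a global front end sends each user to a web instance in the user’s region, and that web instance calls the internal load balancer in its own region, because an internal load balancer is regional. Region names, IP addresses, class names and the CRC32-based backend choice are illustrative assumptions.

```python
from __future__ import annotations

import zlib
from dataclasses import dataclass


@dataclass
class InternalLB:
    region: str
    ip: str
    backends: list[str]  # application-tier instance IPs

    def route(self, flow_key: str) -> str:
        # Stable hash of the flow key picks one application-tier backend.
        return self.backends[zlib.crc32(flow_key.encode()) % len(self.backends)]


@dataclass
class WebInstance:
    name: str
    region: str

    def handle(self, request_id: int, ilbs: dict[str, InternalLB]) -> str:
        # Internal Load Balancing is regional, so call the ILB in this region.
        ilb = ilbs[self.region]
        return ilb.route(f"{self.name}:{request_id}")


def global_frontend(user_region: str, web_tier: list[WebInstance],
                    request_id: int) -> WebInstance:
    # Toy stand-in for HTTP(S) Load Balancing: prefer web instances in the
    # user's region, otherwise fall back to any web instance.
    local = [w for w in web_tier if w.region == user_region] or web_tier
    return local[request_id % len(local)]


if __name__ == "__main__":
    ilbs = {
        "us-central1": InternalLB("us-central1", "10.240.0.200", ["10.240.0.2", "10.240.0.3"]),
        "europe-west1": InternalLB("europe-west1", "10.132.0.200", ["10.132.0.2", "10.132.0.3"]),
    }
    web_tier = [WebInstance("web-us-1", "us-central1"), WebInstance("web-eu-1", "europe-west1")]
    web = global_frontend("europe-west1", web_tier, request_id=7)
    print(web.name, "->", web.handle(7, ilbs))
```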

3. Deploying highly available virtual appliances

Traditionally, high availability (HA) for hardware appliances is modeled as an active-standby or active-active setup where two (or sometimes more) such devices exchange heartbeats and state information across a dedicated, physical synchronization link in a Layer 2 network.

This model no longer works for cloud-based virtual appliances such as firewalls, because you do not have access to the physical hardware. Layer 2 based high availability doesn’t work either, because public cloud virtual networks are typically Layer 3 networks. More importantly, cloud apps typically store shared state outside the application (for example, for durability), which makes it possible to eliminate traditional session-state synchronization.

Considering all of these factors, a high availability model that works well on Google Cloud Platform is deploying virtual appliance instances behind Internal Load Balancing. Internal Load Balancing performs health checks on your virtual appliance instances and distributes traffic across the healthy ones, and when the appliances run in an autoscaled managed instance group, the number of instances scales up or down based on traffic.
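
Here, health checking takes the place of the heartbeat link. The sketch below models one probed appliance with the consecutive-success and consecutive-failure thresholds that Google Cloud health checks expose (`--healthy-threshold` and `--unhealthy-threshold` in gcloud). The `ProbeTarget` class, its default values, and starting new appliances as unhealthy are assumptions for illustration; only appliances currently marked healthy are eligible for traffic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ProbeTarget:
    name: str
    healthy_threshold: int = 2    # consecutive successes needed to mark healthy
    unhealthy_threshold: int = 2  # consecutive failures needed to mark unhealthy
    healthy: bool = False         # assumption: new appliances start unhealthy
    streak: int = 0               # consecutive probes disagreeing with `healthy`

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result and return the (possibly updated) state."""
        if probe_ok == self.healthy:
            self.streak = 0  # result agrees with the current state
            return self.healthy
        self.streak += 1
        needed = self.unhealthy_threshold if self.healthy else self.healthy_threshold
        if self.streak >= needed:
            self.healthy = probe_ok  # flip state after enough consecutive results
            self.streak = 0
        return self.healthy


def eligible(appliances: list[ProbeTarget]) -> list[str]:
    """Names of appliances that should currently receive traffic."""
    return [a.name for a in appliances if a.healthy]


if __name__ == "__main__":
    fw_a, fw_b = ProbeTarget("fw-a"), ProbeTarget("fw-b")
    for ok_a, ok_b in [(True, True), (True, False), (True, False)]:
        fw_a.record(ok_a)
        fw_b.record(ok_b)
    print(eligible([fw_a, fw_b]))
```

Requiring several consecutive results before flipping state keeps a single lost probe from pulling a working appliance out of rotation.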

What’s next for Internal Load Balancing

We have a number of exciting Internal Load Balancing features coming soon, including service discovery using DNS, load balancing traffic from on-prem clients across a VPN to backends behind an internal load balancer, and support for regional instance groups.

Until then, we hope you will give Internal Load Balancing a spin. Start with the tutorial, read the documentation and deploy it on GCP. We look forward to your feedback!

Source: Building scalable private services with Internal Load Balancing

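Except as otherwise noted, the content of this article is licensed under the Creative Commons Attribution 3.0 License, and code samples are licensed under the Apache 2.0 License. For details, see our Terms of Service.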
